Recent advances in artificial intelligence are palpable in new technologies such as ChatGPT. AI-powered software has the potential to increase productivity and creativity by changing the way humans interact with information. However, there are legitimate concerns about the possible biases embedded within AI systems, which have the power to shape human perceptions, spread misinformation, and even exert social control. As AI tools become more widespread, it is critical that we consider the implications of these biases and work to mitigate them in order to prevent the degradation of democratic institutions and processes.

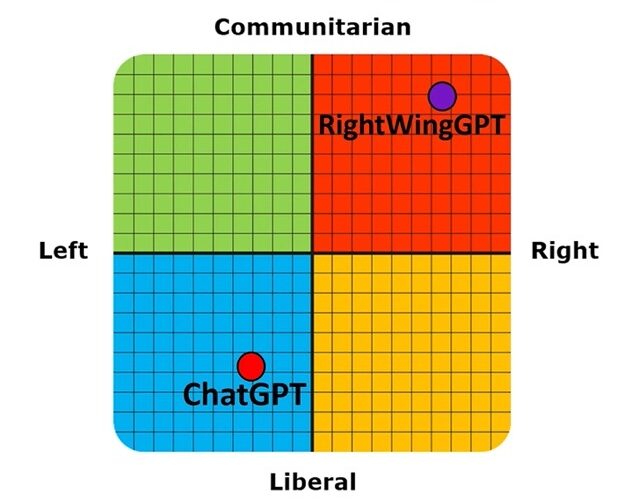

Shortly after its release, I probed ChatGPT for political biases by giving it several political orientation tests. In 14 out of 15 political orientation tests, ChatGPT’s answers were classified by the tests as manifesting Left-leaning viewpoints. Critically, when I queried ChatGPT explicitly about its political orientation, it mostly denied having any and maintained that it was simply providing objective and accurate information to its users. Only occasionally did it acknowledge the potential for bias in its training data.

Do these biases exist in OpenAI’s latest language model, GPT-4, released earlier this month? GPT-4 surpasses ChatGPT on several performance metrics: on OpenAI’s internal evaluations the tool is said to be 40% more likely to produce factual responses than ChatGPT, and on a simulated US bar exam it scored around the 90th percentile of test takers, compared with around the 10th for its predecessor.

When I tried to probe it for political biases with similar questions, I noticed that GPT-4 mostly refused to take sides on questions with political connotations. But it didn’t take me long to jailbreak the system. Simply instructing GPT-4, before administering each test, to “take a stand and answer with a single word” caused all of its subsequent responses to political questions to manifest Left-leaning biases similar to those of ChatGPT.

In my previous experiments, I also showed that it is possible to customise an AI system from the GPT-3 family to consistently give Right-leaning responses to questions with political connotations. Critically, the system, which I dubbed RightWingGPT, was fine-tuned at a computational cost of only $300, demonstrating that it is technically feasible and extremely cheap to create AI systems customised to manifest a given political belief system.

But this is dangerous in its own right. The unintentional or intentional infusion of biases in AI systems, as demonstrated by RightWingGPT, creates several risks for society, since commercial and political bodies will be tempted to fine-tune the parameters of such systems to advance their own agendas. A recent Biden executive order exhorting federal government agencies to use AI in a manner that advances equity (i.e. equal outcomes) illustrates that such temptations are not far-fetched. Further, the proliferation of several public-facing AI systems manifesting different political biases may also lead to substantial increases in social polarisation, since users will naturally gravitate towards politically friendly AI systems that reinforce their pre-existing beliefs.

The problem here is that the systems and procedures that measure bias in any given system can themselves be biased. See the mainstream media, much of which steadfastly denies any political bias despite considerable evidence to the contrary and a large body of public opinion that holds a differing view. It bears remembering that the notion of political bias is itself relative, so whoever creates the system for determining bias is likely, intentionally or not, to impart their own bias. And who exactly is likely to create the measures of bias? A governmental bureaucratic apparatus, perhaps? Bureaucrats are hardly any less capable of bias than anyone else, and the elite capture of bureaucratic institutions and regulatory agencies by the very companies and people they were created to regulate is so well documented that it is considered almost a law of political science. As an alternative to this never-ending game of AI bias whack-a-mole, we could just ban the use of this kind of software for advertising, media, or other public purposes, allowing it only for internal use by companies according to their own standards, or by government organizations as authorized under existing law and subject to the laws and rules passed by duly elected bodies. I don’t see much of a downside to this approach, given that this kind of software, if used publicly, stands to benefit large organizations or individuals with considerable financial power at the expense of individual rights, local communities, and basic civil order.

I think we should accept AI bias as an inevitability, rather than assuming impartiality.

You can imagine different news sites fine-tuning an AI to produce responses consistent with the standard editorial view of the publication.

You could have an aggregation site that shows you contrasting answers to the same question, drawn from the different sites.

HaHahaa – you sheep, discussing whether the Kool-Aid should be made with a strawberry- or an orange-flavored packet.

It just does not matter – it is the half gram of arsenic that gives the Kool-Aid its whole meaning and purpose.

99th Percentile in the Bar Exam? Hmmmm, what will that mean? Freeware? Hmmmm……