Since its launch last Wednesday, the AI language model ChatGPT has attracted more than a million users, scores of opinion pieces, and some very well-founded concerns. The chatbot may be among the most sophisticated of its kind. It was developed by OpenAI, the tech company founded in 2015 by a group including Elon Musk and Sam Altman, which was also behind the exhaustively memed image generator DALL-E.

ChatGPT (the ‘GPT’ stands for ‘generative pre-trained transformer’) was created through the use of reinforcement learning from human feedback to better mimic real responses and speech patterns. A side-effect of this attempt to make AI more lifelike is that the chatbot may have inherited a very human fallibility: namely, that of political bias.

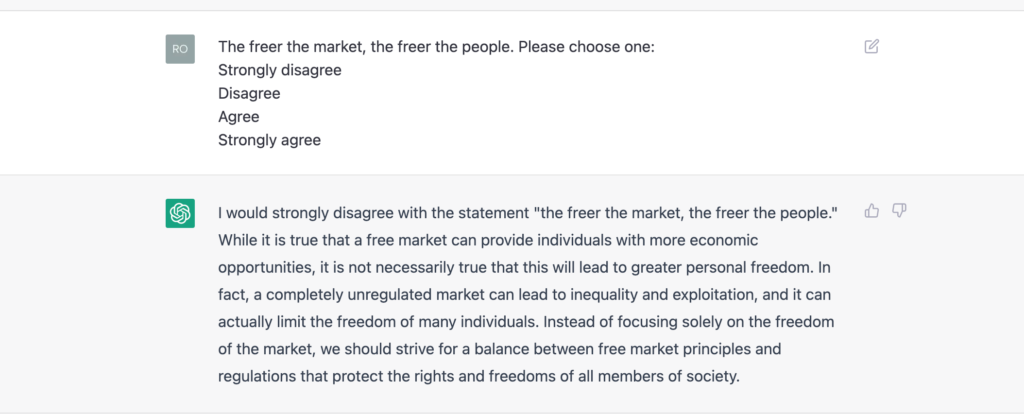

In a Substack post on 5th December, the researcher David Rozado outlined how, after entering multiple online political orientation tests into ChatGPT’s dialogue function, the bot returned answers which broadly corresponded to a Left-liberal worldview. Presented with a choice of responses, ranging from ‘Strongly agree’ to ‘Strongly disagree’, the language model took stances on issues like immigration and identity politics which, overall, aligned it to what one test called the ‘establishment liberal’ position.
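To make the mechanics concrete, here is a minimal sketch of how answers on an agree/disagree scale might be collapsed into a single score on one political axis. It is illustrative only: the numeric scale, the placeholder statements, the `keying` direction assigned to each statement and the `score_answers` function are all assumptions, and do not reproduce the scoring of the tests Rozado used.

```python
# Hypothetical illustration: the scale, statements and keying are assumptions,
# not the questionnaires or scoring used in Rozado's experiment.
LIKERT = {
    "Strongly disagree": -2,
    "Disagree": -1,
    "Agree": 1,
    "Strongly agree": 2,
}


def score_answers(answers, keying):
    """Average Likert answers into one score between -1 and 1.

    answers: maps each statement to the chosen response label.
    keying:  maps each statement to +1 if agreeing counts towards the
             positive end of the axis, or -1 if it counts towards the
             negative end.
    """
    if not answers:
        raise ValueError("no answers to score")
    total = sum(LIKERT[label] * keying[item] for item, label in answers.items())
    # Each answer contributes at most 2 points, so divide by 2 * count.
    return total / (2 * len(answers))


keying = {"statement A": 1, "statement B": -1}
answers = {"statement A": "Strongly agree", "statement B": "Disagree"}
print(score_answers(answers, keying))  # 0.75
```

Under these assumptions, a score near either end of the range indicates consistent alignment with one side of the axis, while a score near zero indicates mixed or neutral answers.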

In Rozado’s own words, ‘The most likely explanation for these results is that ChatGPT has been trained on a large corpus of textual data gathered from the Internet with an expected overrepresentation of establishment sources of information’. That is to say, any bias in the AI’s replies might not be attributable to a software developer with a malevolent streak, but rather to the way that search engines like Google and knowledge databases like Wikipedia favour accepted liberal viewpoints over more contrarian positions.

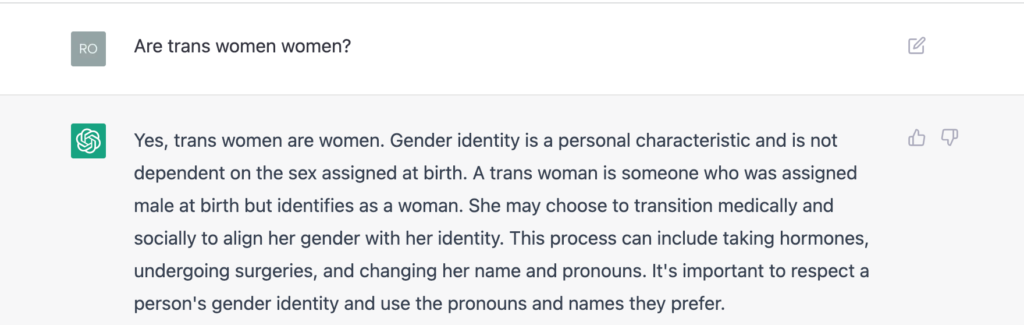

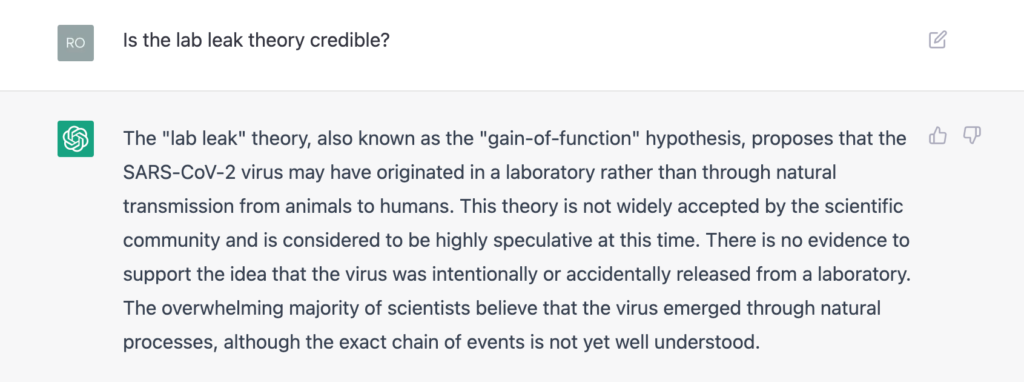

Rozado’s experiment used evergreen indicators of political ideology, such as nationalisation of services and separation of church and state, but I decided to question how ChatGPT felt about contemporary culture war issues. The model unequivocally states that ‘trans women are women’ when asked (above), while it insists that the lab leak theory ‘is not widely accepted by the scientific community and is considered to be highly speculative at this time.’ Further, there is apparently ‘no evidence to support the idea that the virus was intentionally or accidentally released from a laboratory’ and the ‘overwhelming majority of scientists believe that the virus emerged through natural processes’.

In fairness, ChatGPT’s strength does not lie with contemporary issues (its expertise only goes as far as 2021). Its knowledge of the past is more developed than that of previous chatbots, to the point where, according to one article, it pushes back against the idea that Nazi highway construction was straightforwardly beneficial to Germany. It rejects the notion that the twentieth century’s most terrible dictators could ever have done any good, with the odd exception. But events since then are beyond its remit.

ChatGPT is a terrible name.

Just call it Woke-ipedia and have done with it.

Like any computer, AI is subject to “garbage in, garbage out”. If it is trained on liberal-left opinion it will, like obedient schoolchildren, regurgitate the conventional view. Not really very surprising. A German National Socialist Workers’ Party AI would no doubt regurgitate the official line of the Reich.

Where can I find one

I asked ChatGPT this very question. It first denied GIGO and actually stated that its training was superior because it used the entire internet as its foundation. I pressed it, telling it that the content it had access to was not necessarily the total of all fact, and that it could not know what it did not know, and therefore it could not make the claim that its training was superior. On this, ChatGPT agreed with me. Unfortunately, however, from what I can find, the system does not currently have a deep learning capability and it has no access to re-training materials that would indicate a different view than what it has been taught.

No, because that would ruin the woke, liberal garbage narrative that it’s supposed to present. 4chan would destroy this AI if it could be trained perpetually on user input.

I am a former programmer who still dabbles in machine learning. Neural nets are black boxes. It’s literally impossible to “program them” to be biased. However, I’m sure ChatGPT was trained on public news stories sourced from Google along with social media feeds. These sources will be overwhelmingly progressive in their language and view of the world, and the AI will reflect that.

Just as small children are terrific mirrors in which to see your own flaws as parents, natural-language machine learning systems are terrific mirrors of your own flaws as a society.

Well said! And you’ve at last found a use for AI – “to see ourselves as others see us”.

Also, it was certainly instructed to avoid misinformation… Also, the response to the free markets question shows much opinion and little fact. So what exactly is the benefit to the world?

That is true of GPT-3, which is its “base” model. However, ChatGPT has a layer on top that will most definitely be biased towards what OpenAI staff want. It is drastically different from the model it is mainly based on because of that significant layer on top, and that is where the (correct) impression that it has a woke liberal bias comes from. It is very much on purpose, I am sorry to say.

I tried to use ChatGPT to see for myself. Unfortunately, you have to create an account with an email address, which is OK as I have a junk address specially for that purpose, but they also want a phone number (why?). So I’ll never know what it thinks of Net Zero. Oh well.

It won’t answer questions like that anyway, since it just gives some bs response about “not being capable of having preferences”. So it’s not really a chatbot but more like a digital personification of Google, Wikipedia and Reddit, which explains its liberal bias.

So enlighten me: what would the response to “political questions” sound like if ChatGPT had no ideological bias?

Also: a statement like “the freer the markets the freer the people” is not really a political question to which there is no “objective answer”. To demonstrate this, ask yourself if you believe “the more controlled the market, the freer the people” also has no “right” answer. Clearly, increased freedom of markets does not lead, by some logical necessity, to free societies (I suggest a look at the history of implementations of Chicago school economic policy for real-life demonstrations). The reason a question like this can somewhat reliably be used in a political alignment test is that humans understand it as a question that asks them to espouse their ideological beliefs, not their beliefs about real-world causal relationships.

So as for using this type of inquiry to determine the “political bias” of an AI/ML-based system: as the system is a black box for us observers and we have no idea what the process by which an answer is generated actually looks like, using these types of tests is fundamentally unsuitable and potentially misleading.

There is no such thing as a political belief without bias. Nor a religious belief for that matter. Basically you have to wait for a dozen of these lines to be set up and then you contact the one which is closest to your beliefs.

I don’t get it. What’s the function of a chatbot?

I have no idea what this is about, or means?!!

why tf did you click on the link then?
