It can be a lonely and unrewarding road, trying to talk about how we define goodness, and what it takes to develop virtue. Outside university seminar rooms these subjects get short shrift in our noisy, events-led public conversation. So I’ve been pleased to see the emerging field of AI ethics bump them, if not to the top of the agenda, at least onto it.

Goodness-talk, in our deeply diverse society, is usually written off in one of two ways. It either sounds insubstantial and naïve, a pastel-coloured pop-gun in a red-clawed world. Only those too ‘nice’ to understand reality would bother. Alternatively, it seems like judgemental finger-pointing with a side helping of hypocrisy. The charge of “virtue signalling” covers both. We distrust the motives of the messenger, and the message by association.

Around the subject of AI, however, the live and urgent questions of a fast-moving, ultra-powerful field are forcing open space for these deeper things. Ethics is no longer a dusty, overlooked backwater of philosophy: its professors are suddenly in demand, asked to solve the problem of how to make machines, if not good, at least (in the famous words of the original Google motto) not evil.

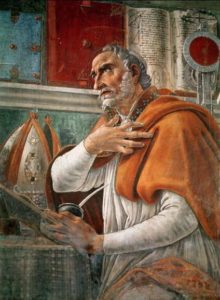

Ethics has long been classified into different schools: utilitarian (harm reduction) and consequentialist (only the outcome, not the motive, matters) schools see the world quite differently from virtue ethics. Drawing on Aristotle and Augustine, this last school has tended to be where religious ethics lands: not, as in the caricature, keeping a ledger of rights and wrongs on some eternal scoreboard, but focusing on what kind of people we are becoming. (See James Mumford’s essay on Terrence Malick for more detail on this.)

The AI ethics field is currently mainly circling round the first two: consequentialist risk management and utilitarian calculus. According to tech writer Tom Chatfield, there is a global consensus emerging around five ethical principles: transparency, justice and fairness, nonmaleficence, responsibility, and privacy.

All of these are laudable but, with the exception of justice, none are recognisable as virtues. It is clear that machines can learn better and faster than we can, and in our engagement with them we are ourselves changed. I wonder whether there is an opportunity here to help us think through who we, not just the machines we rely on, are becoming.

Why not add a more distinctive virtue ethics strand to the conversation? What might virtual virtues be? Could courage, or love, or patience, be part of the ‘ethical data’ we are feeding them? An algorithmic solution to the problem of the human heart seems unlikely of course, but in the attempt, we might just grow closer to the good ourselves.
