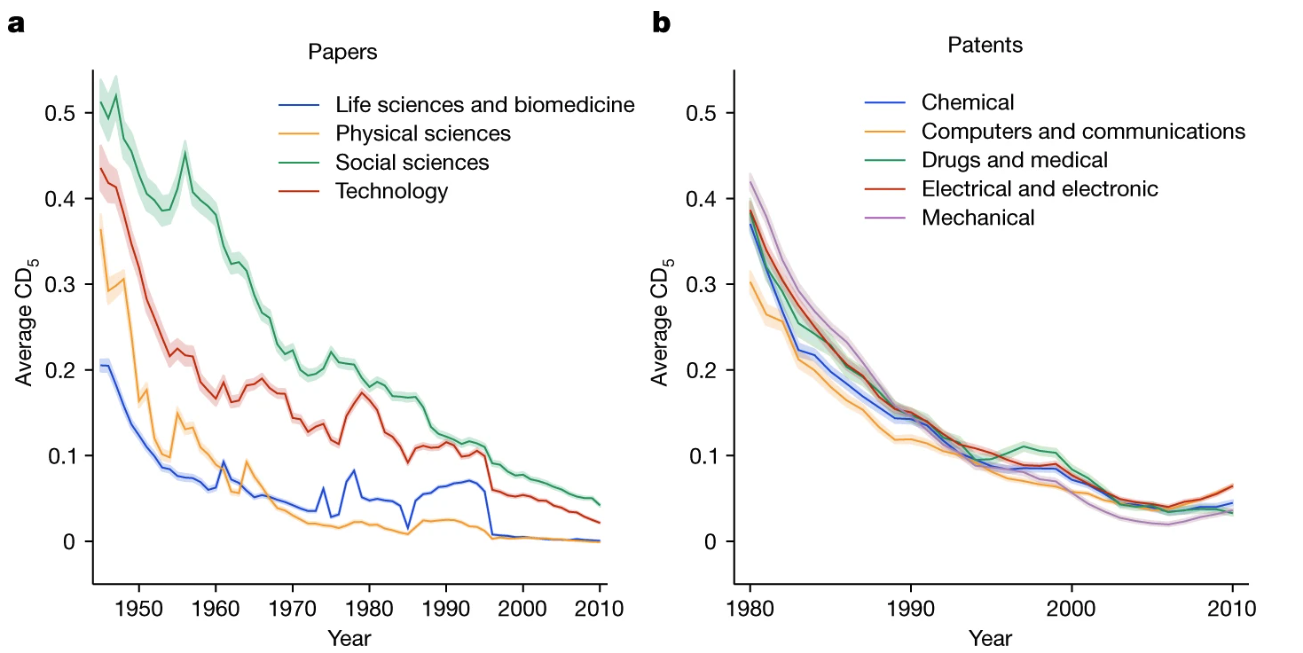

A new paper published this week in Nature provides evidence that the progress of science has slowed considerably over the last few decades. The research, authored by American academics Michael Park, Erin Leahey and Russell J. Funk, builds on a previous study, ‘Are ideas getting harder to find?’, which claims that “research effort is rising substantially while research productivity is declining sharply”.

The findings of the Nature paper go against our expectations of scientific research as a process in which prior knowledge facilitates new discoveries, and in which disciplines endlessly branch out into further sub-disciplines. Park, Leahey and Funk write that “relative to earlier eras, recent papers and patents do less to push science and technology in new directions”, and attribute the “decline in disruptiveness to a narrowing in the use of previous knowledge”.

In other words, a larger volume of material is being produced, but scientific research is becoming increasingly specialised, to the point of esotericism and to the detriment of significant advances. What’s more, the Nature paper finds that recent studies are “less likely to connect disparate areas of knowledge”. Drawing on data from 45 million papers and 3.9 million patents, it cites pharmaceuticals and semiconductors as examples of areas of study that are regressing.

The authors note that the decline in disruptive research is not so much due to changes in the quality of published science. Rather, it “may reflect a fundamental shift in the nature of science and technology.”

This shift will, naturally, have an impact beyond the sometimes insular world of scientific study. The researchers claim that the “gap between the year of discovery and the awarding of a Nobel Prize has also increased, suggesting that today’s contributions do not measure up to the past”. Such a slowdown is damaging to the development of health and security policy, and to economic progress more broadly.

How, then, to combat this stasis and encourage more significant breakthroughs in medicine, engineering and climate science? The report suggests that scientists should broaden their frame of reference to foster cross-disciplinary collaboration, and that research should prioritise quality over quantity of output, potentially through extended sabbaticals.

Working off the idea that “using more diverse work, less of one’s own work and older work tends to be associated with the production of more disruptive science and technology”, the authors recommend that scientists avoid the pitfalls of contemporary group-think and self-reference, and more productively draw on the discoveries of the past.
