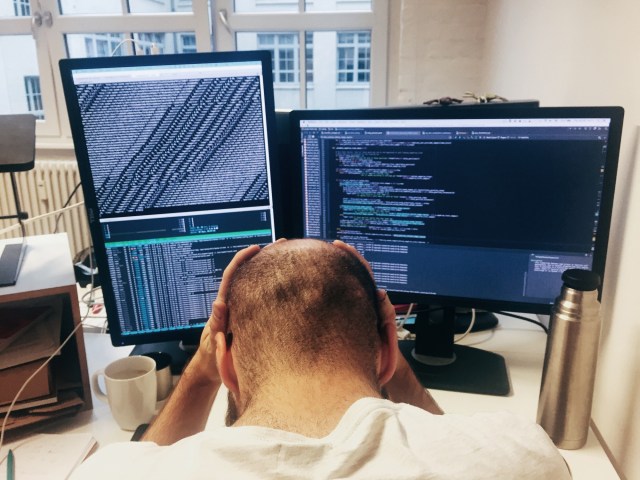

The more complex the machine, the more ways to screw it up. Credit: Adam Kuylenstierna / EyeEm

Stephen Hawking. Elon Musk. Vladimir Putin. What do those gents have in common?

The answer is that they’re all worried about Artificial Intelligence (AI).

Whoever masters AI will control the world, says Putin. Alternatively, our artificially intelligent systems may get ideas above their station and do the whole world-domination thing for themselves.

In a brilliantly informative long read for the Atlantic, James Somers has a more immediate concern. He believes that software has already taken over the world. More and more of our systems, from cars to power stations to military networks, are controlled by the stuff. The trouble is that we’re not fully in control of it – not because it’s developed a mind of its own, but because it’s a mess.

Somers draws a contrast between software and the non-computerised hardware that we used to rely on (and in many cases still do):

“Our standard framework for thinking about engineering failures—reflected, for instance, in regulations for medical devices—was developed shortly after World War II, before the advent of software, for electromechanical systems.”

“‘When we had electromechanical systems, we used to be able to test them exhaustively,’ says Nancy Leveson, a professor of aeronautics and astronautics at the Massachusetts Institute of Technology who has been studying software safety for 35 years… ‘We used to be able to think through all the things it could do, all the states it could get into.’”

Even the most complicated machines of the pre-digital age had a limited number of configurations, each of which could be tested. Mechanical devices could be tweaked and tinkered with, thereby adding further possibilities for malfunction; but, even then, the physical nature of the mechanism placed constraints on the extent to which we could mess around with it:

“Software is different. Just by editing the text in a file somewhere, the same hunk of silicon can become an autopilot or an inventory-control system. This flexibility is software’s miracle, and its curse. Because it can be changed cheaply, software is constantly changed; and because it’s unmoored from anything physical—a program that is a thousand times more complex than another takes up the same actual space—it tends to grow without bound. ‘The problem,’ Leveson wrote in a book, ‘is that we are attempting to build systems that are beyond our ability to intellectually manage.’”

For purposes of amusement, artists sometimes design absurdly over-complicated mechanisms – using as many components as possible to do as little as possible. Named after William Heath Robinson (in Britain) or Rube Goldberg (in America), such machines are rarely built – except as the occasional joke. When it comes to serious engineering, over-elaboration is not only inefficient, it is also easy to spot – and so gets designed out.

Software is more vulnerable, though, because over-elaboration can more easily go unnoticed. Compounding the problem is that software engineers, unlike mechanical engineers, aren’t looking directly at the thing they’re building:

“Why [is] it so hard to learn to program? The essential problem [seems] to be that code [is] so abstract. Writing software [is] not like making a bridge out of popsicle sticks, where you [can] see the sticks and touch the glue. To ‘make’ a program, you [type] words. When you [want] to change the behavior of the program, be it a game, or a website, or a simulation of physics, what you actually [change is] text.”

If you’ve ever wondered why so many government IT projects end in failure, consider all of the above. Software allows endless tinkering by a limitless number of individuals, none of whom has a clear view of the real world or the bigger picture.

What could possibly go wrong?
