In what has been a bleak year for Silicon Valley, the sudden surge in the value of tech company Nvidia, driven by its mastery of chips used for artificial intelligence, may seem like a ray of hope. Yet while this success may reward the firm’s owners and employees, as well as tech-oriented financial speculators, the blessings may not redound so well to the industry’s workforce overall, or to the broader interests of the West.

Nvidia’s rise as the first trillion-dollar semiconductor firm reinforces the de-industrialisation of the tech economy. Unlike traditional market leaders such as Intel, Nvidia does not manufacture its own chips, choosing instead to rely largely on the expertise of Taiwanese semiconductor manufacturers. It has limited blue-collar employment. Intel, a big manufacturer, has 120,000 employees, more than four times as many as the more highly valued Nvidia, which epitomises the increasingly non-material character of the Valley.

The company’s value has been tied directly to the profitable, if socially ruinous, expansion of digital media, notably video games and now artificial intelligence. AI is the new crack cocaine of the digital age, with the power to lure people into an ever more artificial environment while providing a substitute for original human thinking and creativity. But it is enormously promising as a potential teaching tool (perhaps obviating the need for professors) and in areas such as law enforcement.

Industry boosters see Nvidia’s rise as straightforwardly good, and as a sign of continued American dominance of the chip industry. They talk boldly of seizing the “high ground” while competitors take over all the basic tasks of actually making things. But as we saw in cars, consumer electronics and the making of cell phones, America’s key competitors — China, South Korea and Japan — are not likely to be satisfied with being hod carriers to the luminaries of Silicon Valley. China’s stated goal is not to partner but to dominate AI, with all the power it gives the country to control both consumer products and people’s minds, not to mention bolstering its military supremacy. This process will be greatly aided by the takeover or total intimidation of Taiwan, leaving Nvidia and other “fabless” chip firms increasingly at Beijing’s mercy.

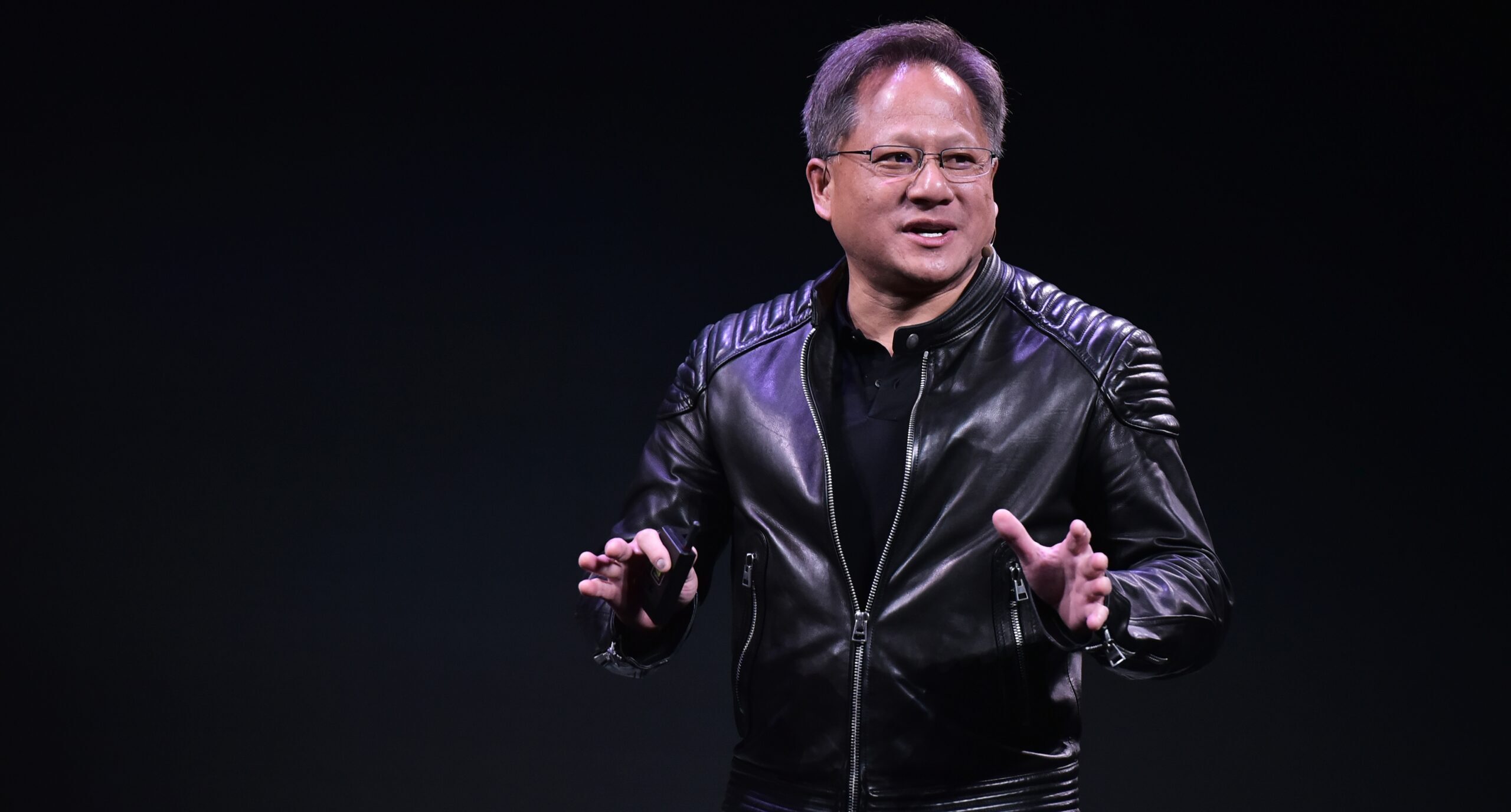

Nvidia’s CEO, Jensen Huang, seems enthused about serving the Chinese market. He believes the Middle Kingdom is in a position to dominate the tech economy. Not surprisingly, he bristles at attempts by Congress and the Biden White House to curb sales of advanced chips to China. “There is nothing that is slowing down China’s development of technology. Nothing. They don’t need any more inspirations,” Huang remarked recently. “China is full steam ahead.” Given this assumption, it’s logical that Nvidia wants free rein to sell its latest and greatest to Beijing, without much in the way of restriction.

How this benefits humanity, or the cause of democracy, is hard to see but, like much of Silicon Valley, Nvidia thinks little about anything as trivial as the national interest or human rights. The tech oligarchs seem more than willing to sell the instruments of our own undoing for a pretty penny. Nor, given its post-industrial structure, does the company’s rise promise much for the many Americans who need better opportunities. The super-geeks at organisations like Nvidia, by contrast, are unlikely to face any prospect of unemployment; even now, sales of luxury houses in places like Portola Valley are still reaching new heights.

Some may see the rise of Nvidia and AI as the harbinger of a technological utopia. What’s more likely is that it’s just one more step on the road to a deadening techno-feudalism and the emergence of an autocratic world system centred on China.
