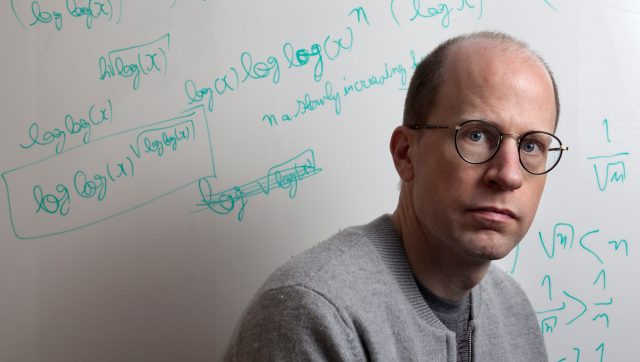

How worried should we be? (Tom Pilston for The Washington Post via Getty Images)

In the last year, artificial intelligence has progressed from a science-fiction fantasy to an impending reality. We can see its power in everything from online gadgets to whispers of a new, “post-singularity” tech frontier — as well as in renewed fears of an AI takeover.

One intellectual who anticipated these developments decades ago is Nick Bostrom, a Swedish philosopher at Oxford University and director of its Future of Humanity Institute. He joined UnHerd’s Florence Read to discuss the AI era, how governments might exploit its power for surveillance, and the possibility of human extinction.

Florence Read: You’re particularly well-known for your work on “existential risk” — what do you mean by that?

Nick Bostrom: The concept of existential risk refers to ways that the human story could end prematurely. That might mean literal extinction. But it could also mean getting ourselves permanently locked into some radically suboptimal state: that could be a collapse, or you could imagine some kind of global totalitarian surveillance dystopia that you could never overthrow. If it were sufficiently bad, that could also count as an existential catastrophe. Now, as for collapse scenarios, many of those might not be existential catastrophes, because civilisations have risen and fallen, and empires have come and gone. If our own contemporary civilisation totally collapsed, perhaps another civilisation would eventually rise out of the ashes hundreds or thousands of years from now. So for something to be an existential catastrophe, it would not just have to be bad; it would also have to have some sort of indefinite longevity.

FR: It might be too extreme, but to many people it feels as though a state of semi-anarchy has already descended.

NB: I think there has been a general sense in the last few years that the wheels are coming off, and that institutional processes and long-term trends that were previously taken for granted can no longer be relied upon: for example, that there are going to be fewer wars every year, or that the education system is gradually improving. The faith people had in those assumptions has been shaken over the last five years or so.

FR: You’ve written a great deal about how we need to learn from each existential threat as we move forward, so that next time when it becomes more severe or more intelligent or more sophisticated, we can cope. And that specifically, of course, relates to artificial intelligence.

NB: It’s quite striking how radically the public discourse on this has shifted, even just in the last six to 12 months. I have been involved in the field for a long time. There were people working on it, but broadly, in society, it was viewed more as science-fiction speculation, not as a mainstream concern, and certainly nothing that top-level policymakers would have been concerned with. But in the UK we’ve recently had this Global AI Summit, and the White House just came out with executive orders. There’s been quite a lot of talk, including about potential existential risks from AI as well as more near-term issues, and that is kind of striking.

I think that technical progress is really what has been primarily responsible for this. People saw for themselves — with GPT-3, then GPT-3.5 and GPT-4 — how much this technology has improved.

FR: How close are we to something that you might consider the singularity or AGI that does actually supersede any human control over it?

NB: There is no clear barrier that would necessarily prevent systems next year or the year after from reaching this level. That doesn’t mean it’s the most likely scenario. We don’t know what happens as you scale GPT-4 to GPT-5, but we know that when you scaled from GPT-3 to GPT-4 it unlocked new abilities. There is also this phenomenon of “grokking”. Initially, you try to teach the AI some task, and it’s too hard. Maybe it gets slightly better over time because it memorises more and more specific instances of the problem, but that’s the hard, sluggish way of learning to do something. But then at some point, it kind of gets it. Once it has enough neurons in its brain or has seen enough examples, it sort of sees the underlying principle, or develops the right higher-level concept, and that produces a sudden, rapid spike in performance.

FR: You write about the idea that we have to begin to teach AI a set of values by which it will function, if we have any hope of maintaining its benefit for humanity in the long term. And one of the liberal values that has been called into question when it comes to AI is freedom of speech. There have been examples of AI effectively censoring information, or filtering information that is available on a platform. Do you think that there is a genuine threat to freedom or a totalitarian impulse built into some of these systems that we’re going to see extended and exaggerated further down the line?

NB: I think AI is likely to greatly increase the ability of centralised powers to keep track of what people are thinking and saying. We’ve already had, for a couple of decades, the ability to collect huge amounts of information. You can eavesdrop on people’s phone calls or social-media postings — and it turns out governments do that. But what can you do with that information? So far, not that much. You can map out the network of who is talking to whom. And then, if there is a particular individual of concern, you could assign some analyst to read through their emails.

With AI technology, you could simultaneously analyse everybody’s political opinions in a sophisticated way, using sentiment analysis. You could probably form a pretty good idea of what each citizen thinks of the government or the current leader if you had access to their communications. So you could have a kind of mass manipulation, but instead of sending out one campaign message to everybody, you could have customised persuasion messages for each individual. And then, of course, you can combine that with physical surveillance systems like facial recognition, gait recognition and credit card information. If you imagine all of this information feeding into one giant model, I think you will have a pretty good idea of what each person is up to, what and who they know, but also what they are thinking and intending to do.

If you have some sufficiently powerful regime in place, it might then implement these measures and then, perhaps, make itself immune to overthrow.

FR: Do you think the rise in hyper-realistic propaganda — deep-fake videos, which AI is going to make possible in the coming years — will coincide with the rise in generalised scepticism in Western societies?

NB: I think in principle a society could adjust to it. But I think it will come at the same time as a whole bunch of other things: automated persuasion bots, for instance, and social companions built from these large language models, with visual components that might be very compelling and addictive. And then also mass surveillance, and the potential for mass censorship or propaganda.

FR: We’re talking about a tyrannical government that uses AI to surveil its citizens — but is there an innate moral component to the AI itself? Is there a chance that an AGI model could in some way become a bad actor on its own without human intervention?

NB: There are a bunch of different concerns that one might have as we move towards increasingly powerful AI tools, and people have completely unnecessary feuds over them. “Well, I think concern X should be taken seriously,” and somebody else says, “I think concern Y should be taken seriously.” People love to form tribes and to beat one another, but X, Y, Z and B and W all need to be taken into account. But yes, you’re right that there is also the separate alignment problem, which is: with an arbitrarily powerful AI system, how can you make sure that it does what the people building it intend it to do?

FR: And this is where it’s about building in certain principles, an ethical code, into the system — is that the way of mitigating that risk?

NB: Yes, or being able to steer it, basically. Where you steer it is a separate question: if you build in some principle or goal, which goal or which principle? But even just having the ability to point it towards any particular outcome you want, or a set of principles you want it to follow, is a difficult technical problem. In particular, what is hard is figuring out whether the way we would do that would continue to work even if the AI system became smarter than us and perhaps eventually super-intelligent. If, at that point, we are no longer able to understand what it is doing or why it is doing it, or what’s going on inside its brain, we still want our method for steering it to keep scaling to arbitrarily high levels of intelligence. And we might need to get that right on the first try.

FR: How do we do that with such incredible levels of dispute and ideological schism across the world?

NB: Even if it’s toothless, we should make an affirmation of the general principle that ultimately AI should be for the benefit of all sentient life. If we’re talking about a transition to the super-intelligence era, all humans will be exposed to some of the risk, whether they want it or not. And so it seems fair that all should also stand to have some slice of the upside if it goes well. And those principles should go beyond all currently existing humans and include, for example, animals that we are treating very badly in many cases today, but also some of the digital minds themselves that might become moral subjects. As of right now, all we might hope for is some general, vague principle, and then that can sort of be firmed up as we go along.

Another hope, and some recent progress has been made on this, is for next-generation systems to be tested prior to deployment, to check that they don’t lend themselves to people who would want to make biological weapons of mass destruction or commit cybercrime. So far AI companies have done some voluntary work on this: OpenAI, before releasing GPT-4, had the technology for around half a year and did red-teaming exercises. It would also be good to have research on technical AI alignment, so that we solve the problem of scalable alignment before we have super-intelligence.

I think the whole area of the moral status of digital minds will require more attention. I think it needs to start to migrate from a philosophy seminar topic to a serious mainstream issue. We don’t want a future where the majority of sentient minds, or digital minds, are horribly oppressed and we’re like the pigs in Animal Farm. That would be one way of creating a dystopia. And it’s going to be a big challenge, because it’s already hard for us to extend empathy sufficiently to animals, even though animals have eyes and faces and can squeak.

Incidentally, I think there might be grounds for moral status besides sentience. I think if somebody can suffer, that might be sufficient to give them moral status. But I think even if you thought they were not conscious but they had goals, a conception of self, the sense of an entity persisting through time, the ability to enter into reciprocal relationships with other beings and humans — that might also ground various forms of moral status.

FR: We’ve talked a lot about the risks of AI, but what are its potential upsides? What would be the best case scenario?

NB: I think the upsides are enormous. In fact, it would be tragic if we never developed advanced artificial intelligence. I think all the paths to really great futures ultimately lead through the development of machine super-intelligence. But the actual transition itself will be associated with major risks, and we need to be super-careful to get that right. And in the last year or so I’ve started worrying slightly that we might overshoot with this increase in attention to the risks and downsides. It still seems unlikely, but less unlikely than it did a year ago, that we might get to the point of a permafrost: some situation where AI is never developed.

FR: A kind of AI nihilism?

NB: Yes, where it becomes so stigmatised that it just becomes impossible for anybody to say anything positive about it. There might effectively be a permanent ban on AI. I think that could be very bad. I still think we need to have a greater level of concern than we currently have. But I would want us to reach the optimal level of concern and stop there.

FR: Like a Goldilocks level of fear for AI.

NB: People like to move in herds, and I worry about it becoming a big stampede to say negative things about AI, and then destroying the future in that way. We could go extinct through some other method instead, maybe synthetic biology, without even ever getting to at least roll the die with AI.

I think the optimal level of concern is actually slightly greater than what we currently have, so I still think there should be more concern: it’s more dangerous than most people have realised. But I’m just starting to worry about overshooting, with the conclusion being: let’s wait for a thousand years before we develop it. And of course, it’s unlikely that our civilisation would remain on track for a thousand years.

FR: So we’re damned if we do and damned if we don’t?

NB: We will hopefully be fine either way, but I think I would like to get AI before some radical biotech revolution. Think about it this way: if you first get some sort of super-advanced synthetic biology, that might kill us, but if we’re lucky, we survive it. Then maybe we invent some super-advanced molecular nanotechnology, and that might kill us, but if we’re lucky we survive that. And then you do the AI, and maybe that will kill us. Or, if we’re lucky, we survive that and we get utopia. Well, then you have to get through three separate existential risks: first the biotech risk, plus the nanotech risk, plus the AI risk.

Whereas if we get AI first, maybe that will kill us, but if not, we get through that, and then I think AI will handle the biotech and nanotech risks. So the total amount of existential risk on that second trajectory would be less than on the first. Now, it’s more complicated than that, because we need some time to prepare for AI, but you can start to think about optimal trajectories rather than the very simplistic binary question of: “Is technology X good or bad?” We should be thinking, on the margin: “Which ones should we try to accelerate, and which ones retard?”
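The comparison between the two trajectories can be written as a simple survival calculation. This is a rough sketch under our own simplifying assumptions, not Bostrom’s formalism: each transition is treated as carrying an independent probability of existential catastrophe, written here with hypothetical symbols $b$ (biotech), $n$ (nanotech) and $a$ (AI), and a safely completed AI transition is assumed to remove the later biotech and nanotech risks, as he suggests.

$$
P_{\text{biotech first}} = (1-b)(1-n)(1-a), \qquad P_{\text{AI first}} = 1-a
$$

$$
P_{\text{AI first}} - P_{\text{biotech first}} = (1-a)\bigl[1-(1-b)(1-n)\bigr] > 0 \quad \text{for } b, n > 0,\ a < 1
$$

The caveat he raises, that we need time to prepare for AI, amounts to saying that $a$ need not be the same on both paths: if AI arrives earlier with less preparation, its risk could be higher, and the comparison then depends on the actual sizes of $a$, $b$ and $n$.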

FR: Do you have existential angst? Does this play on your mind late at night?

NB: It is weird. If this worldview is even remotely correct, then out of all the different people who have lived throughout history, and all the people who might come later if things go well, we happen to be alive at this particular point in human history, so close to this fulcrum or nexus on which the giant future of earth-originating intelligent life might hinge. That seems a bit too much of a coincidence. And then you’re led to questions about the simulation hypothesis, and so on. I think there is more in heaven and on earth than is dreamed of in our philosophy, and that we understand quite little about how all of these pieces fit together.
