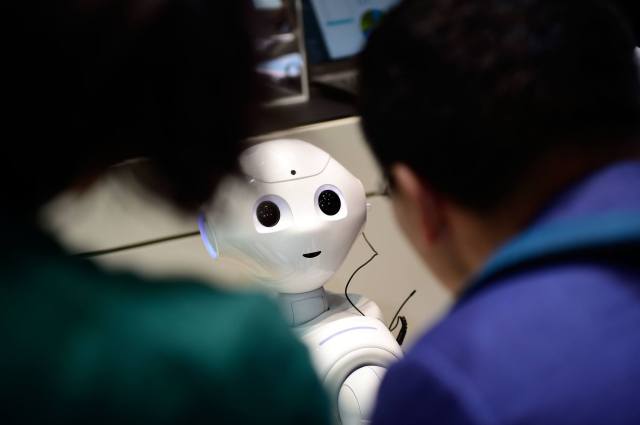

The robot “Pepper” speaks with visitors at the IBM stand at a technology trade fair (Credit: Alexander Koerner/Getty Images)

The danger that artificial intelligence poses to the future of humanity grabs the headlines, but we ignore one of the most significant issues of the 21st century. It’s called the re-engineering of humankind.

“In the end, it’s not about intelligent machines taking over,” says Brett Frischmann, co-author of Re-Engineering Humanity. “I don’t really care about the engineering of artificial intelligence or really intelligent machines. I’m more concerned about the engineering of unintelligent humans.”

Like an episode of Black Mirror, the story that he and Evan Selinger tell in their rather dystopian book begins in small, seemingly insignificant ways.

When I try to log in to a particular newspaper I am usually confronted by a picture of a roadside scene. Over the image is a grid. I am then challenged to prove that I am human by clicking on each box that contains parts of a crosswalk, which I complete with only a grumble, more about the Americanism than anything else. I don’t for one minute think about what the data I am generating is being used for. When I have done this, I am presented with a second picture. It’s only when this task has been completed that I can read the story that I am paying a fee every month to access.

Sound familiar? This challenge is, of course, a CAPTCHA. It stands for Completely Automated Public Turing test to tell Computers and Humans Apart. Ironically, the original Turing test was designed by British computer scientist Alan Turing to be administered by humans to robots as a test of a machine’s ability to exhibit human-like behaviour. In the case of CAPTCHA, it is a machine that is testing a human’s ability to exhibit human-like capabilities. In a sense it is testing the mechanisation of humans.

This automated stimulus-response test would be familiar to any psychologist who has watched rats scurry around a maze. At least the rats get a piece of cheese for their trouble.

The problem for us humans is that we react in this automated manner not only when faced with a CAPTCHA, but when faced with a whole range of other software too. The automatic tick of a box – whether literal or metaphorical – becomes our default, predictable response when faced with choices where we should be paying more attention to the decisions we are making.

We automatically tick the box to agree to Terms and Conditions online without ever reading what they say. We like the piece of Fake News on Facebook without even a quick Google to check out the story. We strap a Fitbit on to our wrist and strive to meet the 10,000 steps a day goal while our private data gets hoovered up to who knows where and we get used to being under constant surveillance. We change our coffee brand so we can have the pleasure of re-ordering it through Alexa, the virtual assistant that works through the Amazon Echo smart speaker – the use of which seems to infect families “like a happy virus.”

The result of this conditioning is that we become more like a simple machine – or a reluctant piece of technology – that can be nudged, shoved, or even programmed, by the coders of the apps and their corporate masters towards the goal that has been chosen for us. Many people just trust that Big Tech is looking after our interests rather than its own, or simply shrug their shoulders and enjoy the benefits of the app or smartwatch anyway.

The problem is that we are so enjoying the happiness, or “cheap bliss” – as Frischmann and Selinger call it – released by our freedom from these mundane tasks of reading a contract, shopping for coffee or recording our exercise that we don’t notice that the technology is starting to blur the line between who is the tool and who is the maker. It doesn’t occur to us that “the things that we use aren’t neutral”.

The voices that warn us are drowned out or dismissed. When we do notice, we become so tied up with the swirl of controversy around a company like Cambridge Analytica, a platform like Facebook or even just social networks in general that we don’t notice that the problem is the much bigger system.

CAPTCHA, Fitbit and Alexa are all part of a network of smart digital technologies that may have different features and different effects on us, but which are together enmeshing every aspect of our lives. Even if we do realise the power of the Internet of Things, it is easier to close our eyes and give ourselves up to the technological momentum.

The fear that we will become like our tools isn’t new – Max Weber famously argued that the drive for efficiency by large bureaucracies was dehumanising work – but for Frischmann and Selinger never has this outcome been so likely, nor the consequences so profound. The cubicles of the typical open-plan office can make us feel like worker ants, but that distinctly steam-age form of dehumanisation is nothing compared to the digital variety – the former clocks off at 5pm, the latter sits on your bedside table. The two writers insist they are not anti-technology, more cautious about its likely outcomes.

The impact of this network of powerful smart devices driven by algorithms designed to meet – and even anticipate – our needs cannot be overstated. These little plastic boxes seem to quickly, and sinisterly, infiltrate every aspect of our lives, even the way we bring up our children. We think we are training the algorithms, when really we are the ones being trained. By the time we think “how could I have ever lived without it?”, we have moved a couple more steps closer to losing our humanity.

Frischmann and Selinger call this process “outsourcing”. Outsourcing, they argue, attacks what it is to be human. When we let algorithms, robots and consumer devices take over what were once fundamental human functions – like making a decision – we lose our free will, our ability to act independently in the world. With less effort needed by us, we experience less of the action itself, and before long the behaviour becomes less intentional, and we do it more automatically.

By giving up control like this, we feel less responsible for how things turn out and conversely feel less of a buzz when they turn out well. Any understanding we may have had of how a process works is lost. We become dependent on the platform or app, a spectator in a life programmed by our devices, the engineers who programmed them and the corporations that own the lot.

The venture-capital-backed June Intelligent Oven is a case in point. It runs on AI, deep learning and many, many sensors and cameras. Needless to say, Alexa is built in. The second generation of the oven, which its manufacturers compare to the Tesla Model S, has just been released and even has food recognition technology built in. The drop in price from $1500 to $500 means it is likely to be in your kitchen soon.

The only problem is, as one reviewer points out, we might lose our ability to ever cook again: “When you cook salmon wrong, you learn about cooking it right. When the June cooks salmon wrong, its findings are uploaded, aggregated and averaged into a June database that you hope will allow all June ovens to get it right next time.”

As with its corporate-world namesake, we justify outsourcing to ourselves, our friends and our children in the name of efficiency. These smart networks reduce the transaction cost of doing something to close to zero. This reduction means that stuff happens fast and gives us what feels like a big boost to our productivity. The only problem is that going to the gym or spending time with the children can start to feel like going to work. It is Taylorism gone mad. (Taylorism was the first attempt to apply science to improve labour efficiency.)

We can’t win. The cheap bliss that the automatic use of these devices gives us is manufactured by the same people who programme our lives. Happiness, satiation, call it what you like, is a feeling that is itself engineered through techno-social engineering. We can’t escape it. The danger that the authors of Re-Engineering Humanity highlight is that the most efficient way to keep billions of people happy might be to engineer their preferences to expect very little and then give them only slightly more than that.

Resistance is possible, the book tells us, perhaps with a little less confidence. To counter the outsourcing of our humanity, we need to consciously understand that we are shaped – even manufactured – by the built environment we live in, whether that is a tower block or the Internet of Things.

Like the resident action groups who take responsibility for the estates that they live on, we need to start taking responsibility for the design and use of our digital ‘estate’. The best way to do this, the authors propose, may be to engineer delays back into our systems.

“Friction is resistance,” Frischmann thinks, because it gives us time to stop and think. That time allows us to form our own beliefs, values and attitudes rather than having an algorithm supply them. “This is free will in action.”

If we don’t start to take back control, then the danger is that we lose what the writers call “our freedom to be off. Our freedom from programming, conditioning and control engineered by others.”
