Truth lies in fiction is the pleasing paradoxical assertion that justifies my avoidance of dreary books about politics, or those suddenly-fashionable works about how to think better. But truth doesn’t exist only between the pages of a well-written novel. Its shadowy form can sometimes be discerned flickering on the walls of the cave inhabited by the experimental sciences.

Consider this very modern problem: I have given a test drug to 20 patients, and 15 of them responded positively. That’s a 75% ‘response rate’, in the jargon, in this sample of patients.

But common sense will tell you that 75% isn’t the drug’s true response rate (would you expect exactly 15 patients to respond out of every further 20 who were exposed to the treatment? Or sometimes 14, sometimes 18, and so on?) So: the observed response rate isn’t the truth. But – and again, applying merely to common sense, and with the supposition that I didn’t rig the trial in some way – surely a response rate of 75% is more probably the true response rate than, say, 25%?

To peer at the truth about a theory concerning the world (in this case, the true response rate of the drug) usually requires an act of inductive inference: from experimental observation (“We observed a 75% response rate in this study”), to statement about the truth (“The true response rate is more likely to lie between 50% and 90% than it is to lie below 10%”). You’ll never know the truth (shades of Jack Nicholson) – you can’t expose every possible patient throughout the Earth’s history to the drug, and count how many of that infinite set would respond – yet you can still make valid claims about it. Roll that thought about your head a moment, and then tell me Statistics isn’t sexy.

That there exists an inductive logic that more or less works is thanks in part to the Reverend Thomas Bayes, whose simple inquiry in the 18th century provides the answer to what I called a “very modern problem”.

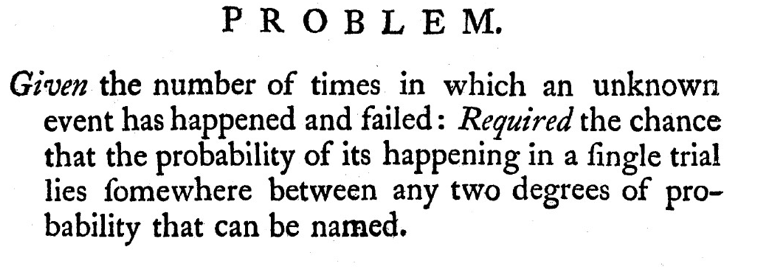

Here’s his version, posthumously published by his friend Richard Price, in 1763:

Other than f-for-s, this is essentially the “what is the probability that the true response rate for this drug lies between 50% and 90%, given that we’ve observed a 75% response in this trial?” question with which we opened.

Bayes answers like this: we have some hypothesis of interest; in this case, perhaps it’s “The true response rate for this drug is higher than 50%”. Call that hypothesis H. Before the trial, I must have some positive belief that H is true: call that Pr(H). Pr(H) must lie between zero (H is impossible), and 1 (H is certainly true) (and if Pr(H) didn’t lie between zero and one, there could be no justification for the experiment).

After the trial, the evidence – that 15 out of 20 patients responded – must alter that belief to an extent warranted by the observed results (else why do any experimental science at all?). “All” Bayes’ theorem makes plain is how you should modulate your pre-trial belief, Pr(H), into your post-trial belief, Pr(H, given that 15 out of 20 patients responded). Mathematically, it’s almost trivial. Inferentially and scientifically, it’s a revolution.

Almost every bit of tech you use pivots on his theorem – every Google auto-complete, not to mention the search algorithms themselves; Turing’s work at Bletchley; astronomical investigations of far-flung galaxies; medical imaging; genetic markers for disease predictions; nearly everything with the modern label “Artificial Intelligence/Machine Learning”: all of these and thousands more exercises in human ingenuity make use, explicitly or otherwise, of the theorem which carries his name.

In one of those dead-ends to which civilisation can sometimes be prone, however, early 20th-century Statistics turned its face against Bayes: theories are either true, or false, and so to discuss them in terms of probability – worse, in terms of “your” probability – was an unwarranted intrusion of the subjective – of the self – into science, which (as every fule kno) is supposed to be entirely objective.

Ronald Fisher, geneticist and statistician – a genius, a giant, but occasionally totally wrong – wrote Bayes out of the statistical universe, by inventing most of the methods that are taught to undergraduate scientists to this day, all of which pivot on “falsificationist” notions. As Popper held a theory to be scientific only to the extent to which an experiment could prove it false, so Fisher attempted an inductive logic by which data could “reject” a hypothesis.

Statisticians were aware of the limits of Fisher’s pseudo-objective approach (it often doesn’t work, it’s incoherent and, ironically, it is also unavoidably, enormously subjective), but continued to teach it – in four undergraduate years at the University of Glasgow, I received nine hours of Bayesian lectures, against heavens know how many hundreds of hours on ideas that flowed from Fisher.

I don’t have space to deal with Fisher’s issues here; suffice to say that Bayesian subjectivity isn’t an excuse for the post-modern relativism which is destroying the social sciences and nor does it imply that “there’s no such thing as the truth”. The biggest roadblock to Bayes wasn’t philosophical, but mechanical: for all but the simplest problems, the maths required to make the Bayesian machine work in practice is too “hard”.

And then the second Bayesian revolution happened. I started my PhD research just as that revolution picked up pace, in the early 1990s, with the “Gibbs sampler” publication of Gelfand and Smith. I’m blessed now to count among my direct colleagues (and friends) Professor Nicky Best (this year honoured by the Royal Statistical Society) who, along with coworkers David Lunn, Christopher Jackson, Andrew Thomas and the brilliant David Spiegelhalter invented BUGS: Bayesian inference using the Gibbs Sampler.

Gibbs sampling allows the statistician, no matter how complex his or her mathematical model – his or her representation of the theory under investigation – to draw simulated observations from that model’s idea of the world and to use those simulations for valid inference.

“Given this noisy, blurred MRI scan of your brain: what is the representation of your actual brain that has highest probability? Which areas are most likely affected by stroke?” – my PhD questions were identical, mutatis mutandis, to Thomas’s 1763 “PROBLEM”, and in theory could be answered by application of his theorem. Prior to Gibbs, however, this was practically impossible: afterwards, it was (almost) trivial. Suddenly, every scientific problem that had a perfect Bayesian solution in theory almost always had nearly perfect Bayesian approximate solutions in practice.

Statistical science was rescued from its obscurity not by Fisher’s dead-end significance tests, but from a mechanical solution that made Bayes’ 1763 theorem relevant and applicable in almost any setting. Without doubt, the portrait for the new 50-pound note should be of Thomas Bayes, a truly Great Briton.

Two highly readable books by philosopher Ian Hacking also shaped my understanding of how, in his words, the “quiet statisticians have changed our world”: The Taming of Chance explains how the concept of statistical “laws” came to rival those of the physical world in terms of their impact on human affairs. And An Introduction to Probability and Inductive Logic is a great exposition of probability and the ideas of both Fisher and Bayes (and others). Probability, the language of uncertainty, is the thing that everyone (including undergraduate statisticians) thinks they “get”, until they think about it carefully and realise they don’t.

If you’d like to read more, Howson and Urbach’s Scientific Reasoning is magisterial. In the same year I wasn’t being taught Bayesian methods by Glasgow University’s statisticians, Peter Urbach’s partner, who headed the Drama department, gave me a copy of this book, and it changed my life. I recommend it to everyone; Howson and Urbach were philosophers of science, not statisticians, and it’s very readable. For completely un-mathematical friends I’ve been recommending Thinking Statistically by Uri Bram.

Join the discussion

Join like minded readers that support our journalism by becoming a paid subscriber

To join the discussion in the comments, become a paid subscriber.

Join like minded readers that support our journalism, read unlimited articles and enjoy other subscriber-only benefits.

Subscribe