Scientific data-mining is contaminating serious study

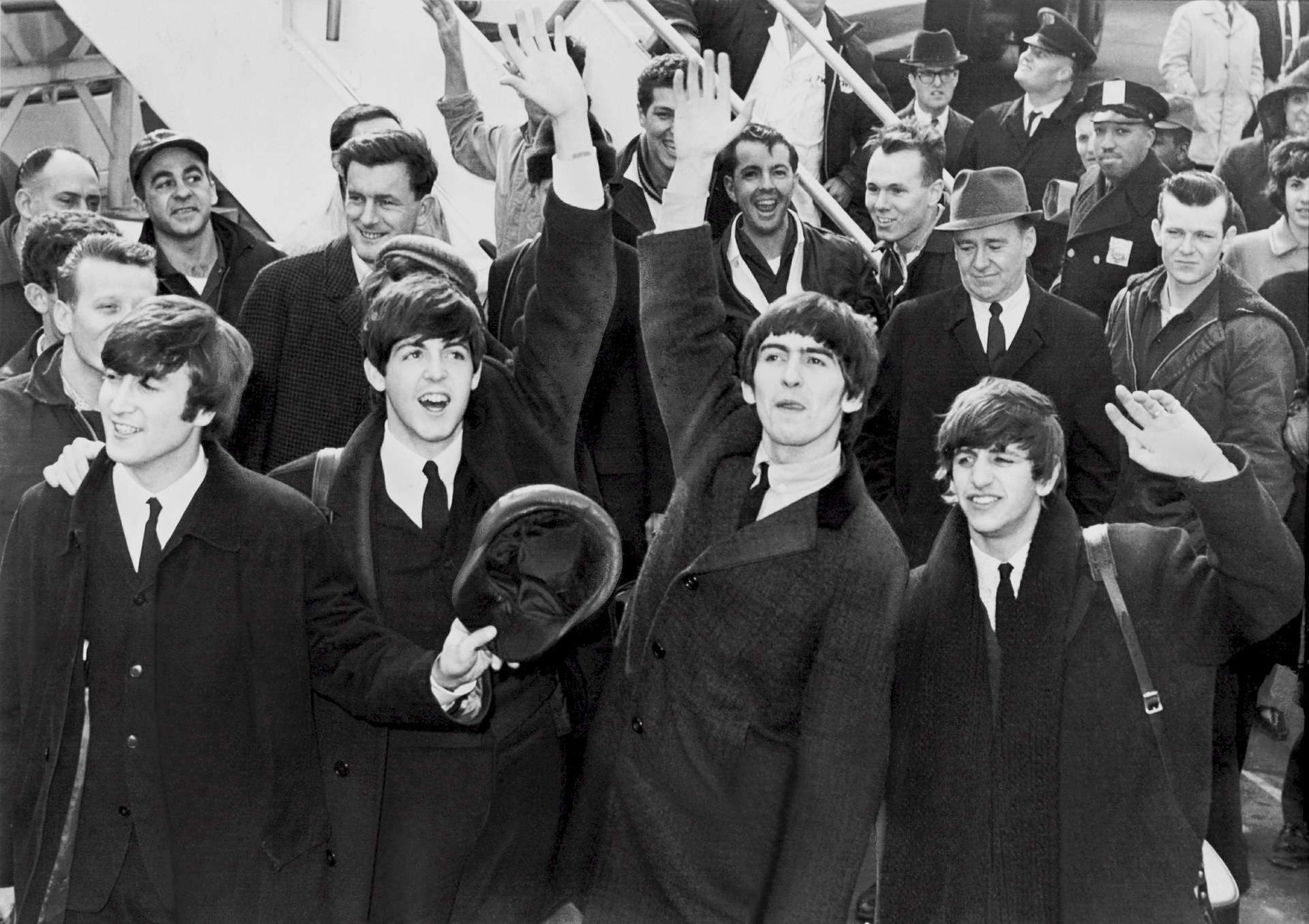

You may not have heard of the most surprising scientific result of all time. It was published in the journal Psychological Science[1. ‘False-Positive Psychology: Undisclosed Flexibility in Data Collection and Analysis Allows Presenting Anything as Significant’, 2011. Joseph P. Simmons, Leif D. Nelson, and Uri Simonsohn], and it found that listening to ‘When I’m 64’ by the Beatles literally made you younger by nearly 17 months.

Not “made you feel younger”, or “made your brain younger”: the study demonstrated that listening to certain songs made you younger. And these surprising findings met the standard of “statistical significance” which most scientific journals demand.

In fact, the three authors of the study had deliberately set out to make a point. They wanted to show that, by using routine statistical methods[2. Scott Alexander, ‘Two dark side statistics papers’, Slate Star Codex, 2 January 2014], you could find apparently solid results that were not merely unlikely but patently absurd.

The problem is that the standard of “statistical significance” is too easily subverted. To prove it, the three authors used tricks that pull relevant-looking patterns out of pure random noise. We might call their techniques “cheating”, but they are routinely used in almost every field of science.

Say you’re running an experiment into whether or not a coin is biased towards heads. You flip the coin three times; it comes up heads three times. Does that prove your hypothesis? No: sometimes you get three heads in a row just by chance.

That’d be called a “false positive”. Your chance of getting three heads in a row on a fair coin is 1 in 8, or as a scientist would write it, p=0.125.

In 1925, the great statistician RA Fisher arbitrarily decided[3. Statistical Methods for Research Workers, RA Fisher, 1925] that the threshold for “significance” should be p=0.05, or a 1 in 20 chance of a false positive. That threshold is the standard most research is still held to. Your three heads in a row wouldn’t clear it, but five would: that’d be a 1 in 32 chance, or p≈0.031.
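The coin arithmetic can be checked in a few lines. This is a minimal Python sketch, assuming a perfectly fair coin and independent flips; `p_all_heads` and `THRESHOLD` are names invented for the illustration. It prints the chance of an unbroken run of heads for one to six flips and whether that chance falls below Fisher’s 0.05.

```python
from fractions import Fraction

THRESHOLD = 0.05  # Fisher's conventional cut-off for "significance"


def p_all_heads(n: int) -> Fraction:
    """Exact chance that a fair coin lands heads on all n independent flips."""
    return Fraction(1, 2) ** n


for flips in range(1, 7):
    p = p_all_heads(flips)
    verdict = "significant" if p < THRESHOLD else "not significant"
    print(f"{flips} heads in a row: 1 in {p.denominator}, p = {float(p):.4f} ({verdict})")
```

Three heads gives p=0.125 and four gives p=0.0625, both above the line; five, at 1 in 32, is the first run to clear it.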

The “When I’m 64” study had p=0.04, and thus met the threshold.

To make their point, they ran a simple test: they took 20 subjects, randomly divided them into two groups, and played one group the Beatles and the other a control song, “Kalimba” by Mr Scruff. In theory, randomly dividing subjects into two groups means the groups should be, on average, the same; the only difference should be the intervention, which song they listened to. That means that, in theory, any significant difference between the two groups has to be caused by that intervention. It’s a randomised controlled trial, the same design used to test cancer drugs.
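Random assignment balances the groups on average, not in every single trial. The minimal Python simulation below illustrates this under stated assumptions: 20 invented ages (drawn from a normal distribution with mean 21 and standard deviation 1.5, not the study’s data), no intervention at all, and a hypothetical helper `random_split` that shuffles the participants into two groups of ten. Averaged over many re-randomisations the gap between the groups is close to zero, but some individual splits still differ by a year or more purely by chance.

```python
import random
import statistics


def random_split(values, rng):
    """Shuffle a copy of values and cut it into two equal-sized groups."""
    shuffled = list(values)
    rng.shuffle(shuffled)
    half = len(shuffled) // 2
    return shuffled[:half], shuffled[half:]


rng = random.Random(64)  # fixed seed so the run is repeatable

# Hypothetical ages for 20 participants (invented, not the study's data).
# No song is played, so the true effect of the "intervention" is zero.
ages = [rng.gauss(21, 1.5) for _ in range(20)]

# Re-randomise the same 20 people many times and record the gap in mean age.
diffs = []
for _ in range(10_000):
    group_a, group_b = random_split(ages, rng)
    diffs.append(statistics.mean(group_a) - statistics.mean(group_b))

print(f"average gap over all splits: {statistics.mean(diffs):+.3f} years")
share = sum(abs(d) >= 1 for d in diffs) / len(diffs)
print(f"splits with a gap of a year or more: {share:.1%}")
```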

And the study found that the “When I’m 64” group was, on average, 1.4 years younger than the control group, and found that this difference was statistically significant. So they had proved – to the standard most journals demand – that “When I’m 64” causes you to get younger.

How? Essentially, they gave themselves lots of chances to get that 1-in-20 fluke, then hid all their failed attempts at getting five heads in a row.

For instance, as well as playing the Beatles and Mr Scruff, they also played some participants “Hot Potato” by the Wiggles. And they asked 11 other questions, including how old participants felt, their political orientation, whether they referred to the past as “the good old days”, and so on.

This allowed them to look at lots of different possibilities which might demonstrate their conclusion. Did the people who listened to “Hot Potato” happen, by chance, to be more politically conservative? Did people who listened to the Beatles say “the good old days” more often? No? Then the scientists didn’t report on that. There were dozens of ways to analyse the data, easily enough to get a 1-in-20 result just by fluke.

This behaviour is called “hypothesising after results are known”, or HARKing[4. Norbert L. Kerr, ‘HARKing: Hypothesizing after the results are known’, Personality and Social Psychology Review, 1 August, 1998]. It means waiting until you’ve got your data, then sifting through it in imaginative ways until you find something that looks like a result. It gives you a much higher chance of (falsely) reaching a “significant” finding: Simmons, Nelson and Simonsohn show that combining tricks like these, collectively known as “p-hacking”, can push the chance of a false positive above 60%.
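A rough way to see how quickly the odds stack up: if each analysis were an independent test of an effect that does not exist, the chance that at least one comes out “significant” at p<0.05 would be 1 - 0.95^k for k analyses. The Python sketch below assumes that independence, which real analyses of one dataset do not satisfy; `chance_of_a_fluke` is a name invented for the illustration.

```python
def chance_of_a_fluke(num_analyses: int, alpha: float = 0.05) -> float:
    """Chance that at least one of num_analyses independent tests, each of an
    effect that does not exist, comes out "significant" at level alpha."""
    return 1 - (1 - alpha) ** num_analyses


for k in (1, 5, 10, 20, 40):
    print(f"{k:>2} analyses: {chance_of_a_fluke(k):.0%} chance of at least one fluke")
```

On this simplified model a single analysis carries the advertised 5% risk, while 20 independent analyses push it to about 64%. Real analyses of the same data overlap, so the true figure depends on how correlated they are; the sketch shows the direction of the problem, not Simmons, Nelson and Simonsohn’s own calculation.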

And this happens all the time, in real papers. A study by the Oxford University Centre for Evidence-Based Medicine[5. The Centre for Evidence Based Medicine Outcome Monitoring Project (COMPare), Ben Goldacre, Henry Drysdale, Carl Heneghan, 18 May, 2016] found that the top five medical journals in the world regularly publish studies which change what they’re measuring after the trial begins, a practice related to HARKing.

Chris Chambers[6. Chris Chambers, ‘Psychology’s registration revolution’, The Guardian], a professor of psychology at Cardiff University, tweeted recently that he’d had a paper rejected for having conclusions that were “not necessarily novel”.

Short thread. Had a paper accepted today at @royalsociety Open Sci & another rejected at Appetite.

Here’s some review comments from Appetite, not as a whinge (I’m too old & leathery to complain about reviews) but as a reminder of the challenge we face reforming the field /1

— Chris Chambers (@chrisdc77) January 11, 2018

“We could have been more novel,” he wrote. “We could have HARKed this paper up the wazoo or p-hacked it. We didn’t.”

The trouble is a deep-seated flaw in the process of “peer review”. “[Reviewers] judge the work,” Chambers said, “not just on how important the question is and how well the study is designed, but also whether the results are novel and exciting.”

This leads to a fundamental problem. When you select what to publish based on results, the knowledge base reflects only some of the truth. According to Chambers: “Positive studies get into journals but equally high-quality studies that don’t show results are basically censored.”
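Chambers’s point can be made concrete with a toy simulation. The Python sketch below assumes 10,000 small two-group studies of an intervention that truly does nothing, outcomes with a known standard deviation of 1, and journals that publish only results that are significant at p<0.05 in the hoped-for direction; `run_study` and all the numbers are invented for the illustration. Across all studies the average effect is close to zero; across the published ones it is large, even though nothing is going on.

```python
import random
import statistics


def run_study(rng, n_per_group=10, true_effect=0.0):
    """Simulate one two-group study whose outcomes have standard deviation 1.

    Returns the observed difference in group means and whether it is
    significant at p < 0.05 (two-sided z-test) in the positive direction.
    """
    treated = [rng.gauss(true_effect, 1) for _ in range(n_per_group)]
    control = [rng.gauss(0, 1) for _ in range(n_per_group)]
    diff = statistics.mean(treated) - statistics.mean(control)
    standard_error = (2 / n_per_group) ** 0.5  # known variance of 1 per person
    return diff, diff / standard_error > 1.96


rng = random.Random(2018)
# The intervention does nothing: true_effect stays at its default of zero.
studies = [run_study(rng) for _ in range(10_000)]
all_effects = [diff for diff, _ in studies]
published = [diff for diff, significant in studies if significant]

print(f"studies run: {len(studies)}, published: {len(published)}")
print(f"mean effect across all studies:       {statistics.mean(all_effects):+.2f}")
print(f"mean effect across published studies: {statistics.mean(published):+.2f}")
```

If every study were published regardless of outcome, readers would see the first, near-zero average instead of the second.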

This sort of “publication bias” is well documented[7. Ikhlaaq Ahmed, Alexander J Sutton, Richard D Riley, ‘Assessment of publication bias, selection bias, and unavailable data in meta-analyses using individual participant data: a database survey’, BMJ, 3 January 2012]. “Publication bias is catastrophic for medicine,” says Chambers. It also has profound implications for government policy: in the US, the federal government set up a $22 million (£16 million) programme called “Smarter Lunchrooms” on the basis of studies that contained flawed or missing data, several of which have since been retracted or corrected.

Fortunately, some people are trying to fix this. One remedy is preregistration: researchers state their hypotheses before collecting data, which prevents HARKing. Chambers is the founder of an initiative called Registered Reports: journals that sign up review a study on the basis of its methods alone, before the results are known, and agree to publish regardless of the outcome.

This would take publication bias out of the equation, and would equally remove the incentive for researchers to mine their data for significant findings, because whether the results are novel no longer matters.

Such initiatives won’t make science perfect. But they would almost certainly improve things by aligning scientists’ incentives with those of society. They would cut fruitless research, help prevent ineffective policies and encourage straightforward scientific innovation. Our lives would be made measurably better.
