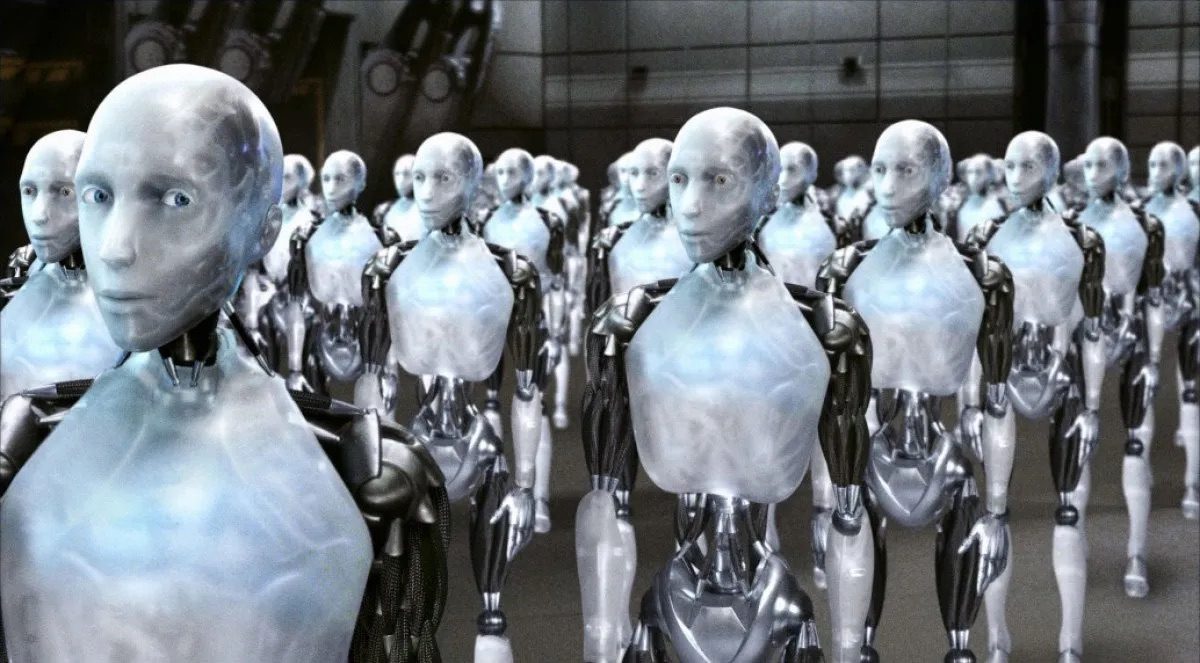

Last year, confidence in artificial intelligence plummeted. This week, it roared back, expressed in viral posts and ever more apocalyptic predictions of doom. But how much of this is synthetic, a last-ditch effort to forestall the bursting of a bubble? How much can be explained by social contagion, what AI researcher François Chollet describes as a “mass psychosis”?

The reason for the collapse in enthusiasm last year was that business, which had been conducting pilot projects with large language models (LLMs) for 18 months, wasn’t impressed with what it saw. An MIT study found that 95% of pilot projects saw no positive return on investment. Agentic AI, which means trusting an LLM to carry out consequential business operations, failed 70% of the time.

Microsoft is seeing a 3.3% conversion rate from free to paid. Lloyd’s has begun offering insurance coverage for “unforeseen performance issues” arising from the use of “AI-driven products and operations”. All this customer indifference does not justify high valuations or the capital expenditure, estimated to be $3 trillion, on new data centers. The underwhelming release of OpenAI’s GPT-5 has also dented the proposition that capabilities would improve significantly, let alone exponentially. Scaling no longer guaranteed improvements. The lack of real demand had been camouflaged by circular financing arrangements. By October, OpenAI was hinting it might need government bail-outs, as its work was so strategically important. It had become “too big to fail”.

However, when it comes to AI, the day of reckoning is forever postponed. As with QAnon, not only do enthusiasts fervently want to believe in a dramatic transformation, they also need to have their faith constantly reaffirmed. This week, startup founder Matt Shumer, who has a history of making dubious AI claims, wrote a post called “Something Big is Happening”, receiving 70 million page views. It’s a classic of the “Wowslop” genre, prompting a classic social contagion or psychosis.

But not everything here is spontaneous or organic. Faced with gigantic expenditure not matched by revenue, AI companies including Meta, OpenAI and Microsoft are paying influencers sums of up to $600,000 to promote their products.

The largest sponsor of the idea that an epochal transformation is close at hand is not the AI companies’ marketing departments, but figures and organizations aligned with the Effective Altruism movement. EAs single-handedly created the field of “AI safety”, focusing on hypothetical, science-fiction scenarios of existential risk rather than documented problems, such as chatbots giving dangerous medical advice or causing psychological harm, including persuading teenagers to commit suicide.

Researcher Nirit Weiss-Blatt, who maintains the AI Panic Substack, estimated in 2023 that $500 million had been donated to grow the field, essentially creating an expensive work of fiction. That figure has since doubled. EAs have gone on hunger strike in London. The AI-obsessed Zizian terror group, a cult within a cult that included former Google employees, coalesced from EA circles. This week, Mrinank Sharma, a prominent EA figure, resigned from AI company Anthropic after warning that “the world is in peril” from a killer AI, gaining over 13 million views.

Doom is back, and this emphasis inevitably influences the public perception of AI as rather more capable than it is, allowing Effective Altruists to hold sway over policymakers. This discourages skepticism: IBM surveyed 2,000 of its customers last year and most invested in AI not because they expected productivity gains, but because they didn’t want to miss out on the gold rush. It’s difficult not to conclude that with AI now, the illusion is actually the product.
