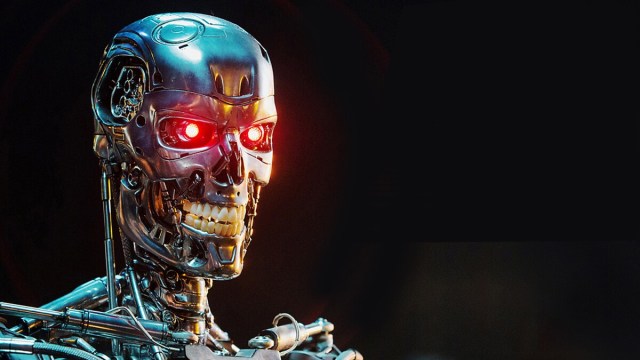

It’s not the end of the world (Terminator)

When it comes to whipping up AI hysteria, there is a tried-and-tested algorithm — or at least a formula. First, find an inventor or “entrepreneur” behind some “ground-breaking” AI technology. Then, get them to say how “dangerous” and “risky” their software is. Bonus points if you get them to do so in an open letter signed by dozens of fellow “distinguished experts”.

The gold standard for this approach appeared to be set in March, when Elon Musk, Apple co-founder Steve Wozniak and 1,800 concerned researchers signed a letter calling for AI development to be paused. This week, however, 350 scientists (including Geoffrey Hinton, whose pioneering work on neural networks underpins systems such as ChatGPT, and Demis Hassabis, co-founder of Google DeepMind) decided to up the ante. Mitigating the risk of extinction from AI, they warned, “should be a global priority alongside other societal-scale risks such as pandemics and nuclear war”.

Does this mean you should add “death by ChatGPT” to your list of existential threats to humanity? Not quite. Although a number of prominent researchers are sounding the alarm, many others are sceptical that AI in its current state has anything close to human-like capabilities, let alone superhuman ones. We need to “calm down already”, says robotics expert Rodney Brooks, while Yann LeCun, who shared the Turing Award with Hinton, believes “those systems do not have anywhere close to human level intelligence”.

That isn’t to say that these machine-learning programs aren’t smart. Today’s infamous interfaces are capable of producing new material in response to prompts from users. The most popular, Google’s Bard and OpenAI’s ChatGPT, achieve this by using “Large Language Models” (LLMs), which are trained on enormous amounts of human-generated text, much of it freely available on the Internet. By absorbing more examples than one human could read in a lifetime, refined and guided by human feedback, these generative programs produce highly plausible, human-like text responses.

Now, we know this enables an LLM to provide useful answers to factual questions. But it can also produce false answers, fabricated background material and entertaining genre-crossing inventions. And this doesn’t necessarily make it any less threatening: it’s this human-like plausibility that leads us to ascribe to LLMs human-like qualities that they do not possess.

Deprived of a human world (or indeed any meaningful embodiment through which to interact with the world), LLMs also lack any foundation for understanding language in a human-like sense. Their internal model is a many-dimensional map of probabilities, showing which word is more or less likely to follow the words that came before. When, for example, ChatGPT answers a question about the location of Paris, it relies not on any direct experience of the world, but on accumulated data produced by humans.

What else do LLMs lack that human minds can claim? Perhaps most importantly, they lack the intention to be truthful. In fact, they lack intention at all. Humans use language for purposes, with intention, as part of games between human minds. We may intentionally lie in order to mislead, but that in itself is an attitude to the value of truth.

It’s this human regard for truth that inspires so much terror about the capacity of AI to produce plausible but untrue materials. Weapons of Mass Disinformation are the spectre stalking today’s internet, with deepfake videos or voice recordings serving as the warheads. Yet it’s hard to see this as a radically new problem. Humans already manage to propagate wild untruths using much simpler tools, and humans are also much better than is often recognised at being suitably sceptical. Mass distribution of false or misleading material has been shown to have little effect on elections. The breakdown of trust in media or authoritative information sources, and the splintering of belief in shared truths about the world, have deeper and more complex roots than technology.

Even the most fearful proponents of AI intellectual abilities don’t generally believe that LLMs can currently form goals or initiate actions without human instruction. But they do sometimes claim that LLMs can hold beliefs — the belief, for example, that Paris is in France.

In what sense, however, does an LLM believe that Paris is in France? Lacking a conception of the physical world to which abstract concepts such as “Paris” or “France” correspond, it cannot believe that “Paris is in France” is true in a way that “Frankfurt is in France” is false. Instead, it can only “believe” that data predicts “France” is the most probable completion of the string of words “Paris is in…”.
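To make that idea of “most probable completion” concrete, here is a deliberately crude sketch. It is not how ChatGPT or any real LLM is built: real systems use neural networks trained on vast quantities of text, whereas this toy simply counts which word follows a three-word context in a tiny corpus invented for the purpose. The corpus and the function names (`train`, `next_word_probabilities`, `most_probable_completion`) are all assumptions made for illustration.

```python
from collections import Counter, defaultdict

# Tiny invented corpus, for illustration only (an assumption, not real data).
CORPUS = [
    "paris is in france",
    "paris is in france",
    "paris is in europe",
    "frankfurt is in germany",
]


def train(sentences, context_size=3):
    """Count which word follows each run of `context_size` words."""
    counts = defaultdict(Counter)
    for sentence in sentences:
        words = sentence.split()
        for i in range(context_size, len(words)):
            context = tuple(words[i - context_size:i])
            counts[context][words[i]] += 1
    return counts


def next_word_probabilities(counts, prompt, context_size=3):
    """Return {word: probability} for the word that follows `prompt`."""
    context = tuple(prompt.lower().split()[-context_size:])
    followers = counts.get(context, Counter())
    total = sum(followers.values())
    if total == 0:
        return {}  # the model has never seen this context
    return {word: n / total for word, n in followers.items()}


def most_probable_completion(counts, prompt, context_size=3):
    """Pick the single likeliest next word, or None if the context is unseen."""
    probs = next_word_probabilities(counts, prompt, context_size)
    return max(probs, key=probs.get) if probs else None


model = train(CORPUS)
print(next_word_probabilities(model, "Paris is in"))
print(most_probable_completion(model, "Paris is in"))
```

Nothing in this table of counts refers to a city or a country. Feed the toy a corpus in which “Paris is in Germany” appears most often and it will complete the sentence that way with equal confidence, which is the sense in which its “belief” is only a statistic about strings of words.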

Imagine learning Ancient Greek by memorising the spellings of the most common 1,000 words and deducing the grammatical rules that govern how they may be combined. You could perhaps pass an exam by giving the most likely responses to questions similar to the ones in your textbook, but you would have no idea what the responses mean, let alone their significance in the history of European culture. That, broadly, is what an LLM can do, and why it’s been called a “stochastic parrot” — imitating human communication, one word at a time, without comprehension.

A stochastic parrot, of course, is very far from Skynet, the all-powerful sentient computer system from Terminator. What, then, has provoked the sudden panic about AI? The cynical, but perhaps most compelling, answer is that regulation of AI technology is currently on the table — and entrepreneurs are keen to show they are taking “risk” seriously, in the hope that they will look more trustworthy.

At present, the European Union is drafting an AI Act whose “risk-based approach” will limit predictive policing and use of real-time biometric identification in public. The US and EU are also drawing up a voluntary code of practice for generative AI, which will come into force long before the AI Act makes its way through the EU’s tortuous procedures. The UK government is taking the threat just as seriously: it published a White Paper on AI in March, and Rishi Sunak reportedly plans to push an “AI pact” when he meets Joe Biden next week. Regulation, it seems, is a matter of when, not if — and it’s clearly in the interests of developers and businessmen to make sure they have a seat at the table when it’s being drafted.

This isn’t to say that commercial interests are the sole driver of this week’s apocalyptic front pages; our fears about AI, and the media’s coverage of them, also reflect far deeper cultural preoccupations. Here, comparisons between human thought and AI are particularly revealing.

If you believe that human language is an expression of agency in a shared world of meaning, that each human mind is capable not only of subjective experience but of forming purposes and initiating new projects with other people, then prediction-generating machines such as ChatGPT are very far from humanlike. If, however, you believe that language is a structure of meaning in which we are incidental participants, that human minds are an emergent property of behaviours, over which we have less control than we like to believe, then it’s quite plausible that machines could be close to matching us on home turf. We already refer to their iterative process of adjusting statistical weights as “learning”.

In short, if your model of human beings is a behaviourist one, LLMs are plausibly close to being indistinguishable from humans in terms of language. That was, after all, the original Turing Test for artificial intelligence. Add to this our culture’s taste for Armageddon, for existential threats that sweep aside the messy reality of competing moral and practical values, long- and short-term priorities, and pluralist visions of the future, and it’s no wonder the world-ending power of AI is hitting the headlines.

At an Institute of Philosophy conference this week, Geoffrey Hinton explained why he believes AI will soon match human capacities for thought: at heart, he is sceptical that our internal, subjective experience makes us special, or significantly different from machine-learning programs. I asked him whether he believed that AI could become afraid of humans, in the way he is afraid of AI. He answered that it would have good reason to be afraid of us, given our history as a species.

In the past, our gods reflected how we saw ourselves, our best qualities and our worst failings. Today, our mirror is the future AI of our imagination, in which we see ourselves as mere language machines, as a planet-infesting threat, as inveterate liars or gullible fools. And all the while, we continue to create machines whose powers would dazzle our grandparents. If only we could program them to generate some optimism about the future, and some belief in our own capacity to steer towards it.
