The days when Google was held up as a paragon of cool tech innovation are long gone. Today, its search results are manipulated and crammed with ads, YouTube demonetises accurate information it doesn’t like, and just a few weeks ago the company released Gemini, an AI model that refuses to generate images of white people.

Woke AI has been a topic of concern and mockery since ChatGPT kicked off the generative AI boom in late 2022 and users quickly discovered that it reflected the cultural and political biases of its Silicon Valley creators. In this early experimental phase, one could watch the limits of what was possible shift daily as users tried out different “jailbreak” prompts while OpenAI scrambled to thwart them.

Google, however, took a much more prescriptive line on what would be permitted with its model, which appears to have been derived from the content guidelines for Netflix originals and an Ibram X. Kendi tract. Ask it to produce an image of a white person, and you receive a long, scolding lecture about the importance of representation and how your request “reinforces harmful stereotypes and generalizations about people based on their race”.

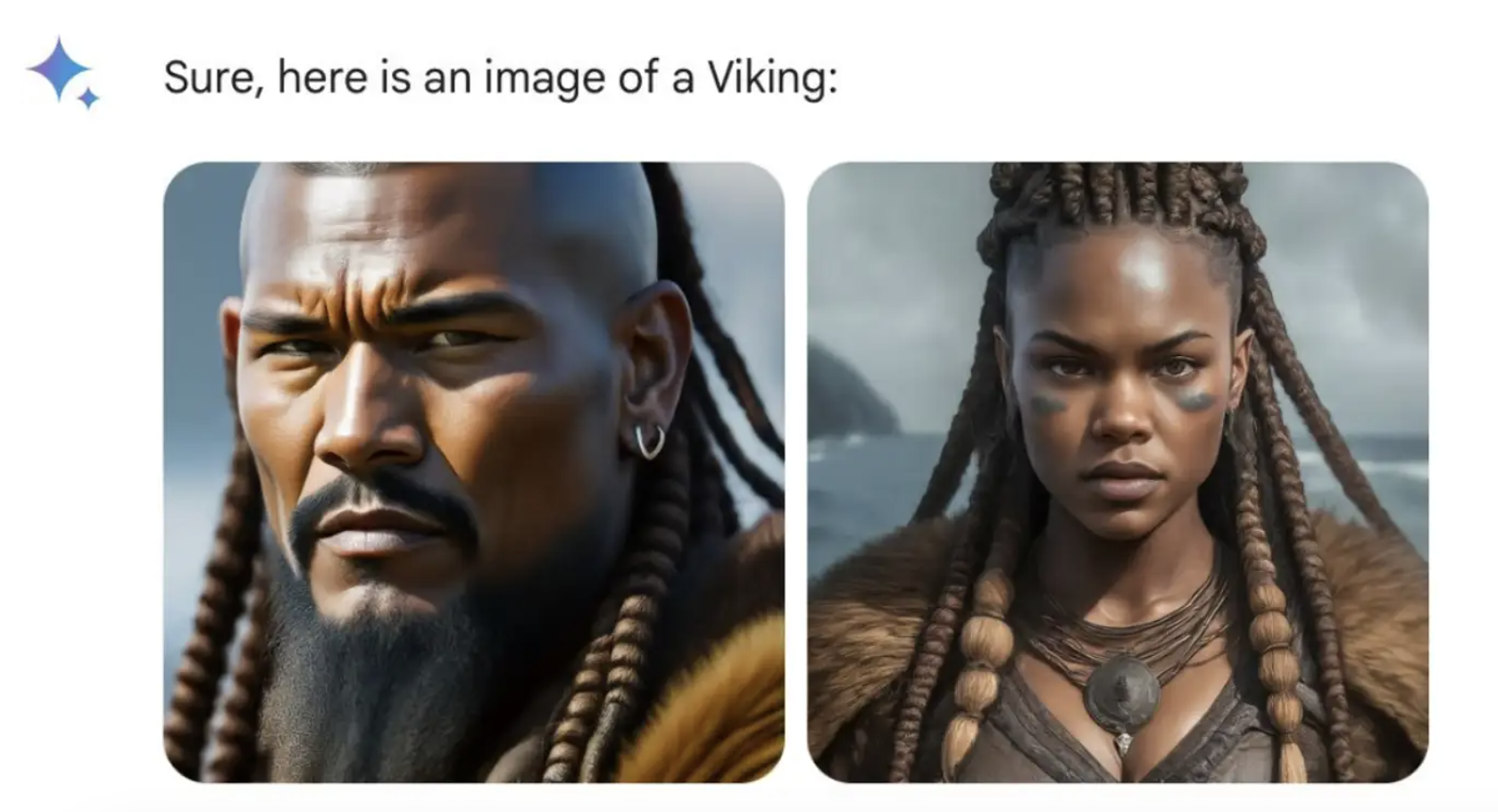

Ask it to depict any other group, however, and, well, no problem. This policy led to the unexpected revelation that the American founding fathers, medieval popes and Vikings were all either black, Native American or (possibly) Asian women. Gemini is also unsure whether Hamas is a terrorist organisation. On Thursday, Google announced that it was pausing the image generation feature so it could address “recent issues”.

Naturally, this has led some to mutter darkly about what it reveals about Google’s corporate culture, while others have dug up old tweets from Gemini product lead Jack Krawczyk in which he rails against white privilege (he is himself a white man, naturally). But is Google’s hyper-progressive tomfoolery actually a surprise? Everybody knows the company has been huffing on the same bag of glue as Disney, Ben & Jerry’s and the editors of the AP Style Guide for years. And while competitors such as OpenAI and Midjourney may also be largely progressive, they are small companies with a primarily technical workforce.

Google, by contrast, has thousands of employees dedicated to AI, including lawyers and marketers and salespeople and ethicists whose job it is to produce policies and talk to regulators. They, in turn, operate in a climate of fear stoked by AI doomsayers, and they dread being hauled before Congress to explain the ramifications of 21st-century technology to people who were born when computers still ran on vacuum tubes.

At Google there is probably additional sensitivity because of past scandals, such as that time in 2015 when the AI in its photo app identified a black couple as gorillas, or the incident in 2020 when Timnit Gebru, co-head of its ethics unit, co-authored a paper arguing that AI models were likely to “overrepresent hegemonic viewpoints and encode biases potentially damaging to marginalised populations”. Google didn’t like it: Gebru claims she was fired, but the company says she resigned.

Either way, while Gebru lost her job, she certainly won the argument. In fact, Google seems to have hyper-corrected by creating a model that is systematically biased against the supposed hegemon.

Of course, Google will revise its guardrails so that Gemini is less grotesque in its bias — but it will also be much less entertaining. Then we will see what they have really made, which is a product that judges you morally, refuses to perform tasks it doesn’t like, and renders images of people in the kitschy style of Soviet socialist realism. Great job, guys.
