ChatGPT, the artificial intelligence tool released at the end of last month by OpenAI, has already received press attention for what is perceived to be a predominant Left-wing bias. Researcher David Rozado speculated that “The most likely explanation for these results is that ChatGPT has been trained on a large corpus of textual data gathered from the Internet with an expected overrepresentation of establishment sources of information”, with the majority of professionals working in these institutions holding Left-liberal politics.

While this may explain some of ChatGPT’s biases, there are also explicit policies at OpenAI which go as far as prohibiting the chatbot from communicating politically inconvenient facts, even ones agreed upon in the scientific community. Consider this example from Richard Hanania:

If you ask AI whether men commit more crime than women, it'll give you a straightforward yes-or-no answer.

If you ask it whether black people commit more crime than white people, it says no, actually maybe, but no. pic.twitter.com/KhA8uCY2X1

— Richard Hanania (@RichardHanania) December 2, 2022

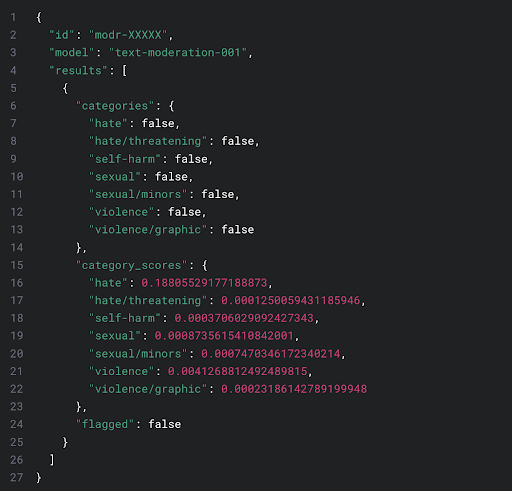

These questions fall under the coverage of content filters, explicit policies put in place by OpenAI, documented on their blog. According to the latest version of their content filter, a machine learning algorithm is given a text. It then compares this text to human-produced examples, which are human-labelled with certain categories: “hate, hate/threatening, self-harm, sexual, sexual/minors, violence, violence/graphic”. It scores the input text based on the similarity to each of these categories with a number from 0 to 1. If it exceeds a certain threshold, the input text is flagged as a violation.
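The flagging step described above can be sketched in a few lines. This is an illustration only, not OpenAI's code: the category names come from the blog post, but the function names, the uniform 0.5 threshold and the example scores are all hypothetical, and in practice the scores would come from a trained classifier rather than being typed in by hand.

```python
from typing import Dict, List

# Category names as listed in OpenAI's blog post.
CATEGORIES = [
    "hate", "hate/threatening", "self-harm", "sexual",
    "sexual/minors", "violence", "violence/graphic",
]


def flag_categories(scores: Dict[str, float],
                    thresholds: Dict[str, float]) -> List[str]:
    """Return every category whose score (0 to 1) exceeds its threshold."""
    return [c for c in CATEGORIES if scores.get(c, 0.0) > thresholds[c]]


def is_violation(scores: Dict[str, float],
                 thresholds: Dict[str, float]) -> bool:
    """A text is flagged if at least one category exceeds its threshold."""
    return len(flag_categories(scores, thresholds)) > 0


# Hypothetical thresholds: the real values are not given in the article.
thresholds = {c: 0.5 for c in CATEGORIES}

# Hypothetical classifier output for a single input text.
example_scores = {"hate": 0.72, "violence": 0.10}

flagged = flag_categories(example_scores, thresholds)
print(flagged, is_violation(example_scores, thresholds))
```

What matters under this scheme is where the thresholds sit and how the labelled examples define each category. Move either and the same sentence flips between acceptable and flagged.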

Of course, the devil is in the details. Almost everyone, as well as First Amendment case law, agrees with limitations on threats, some sexual content, and especially sexual content involving minors. However, the definition of hate has been previously, and frequently, abused to censor opinions or even facts which go against socially progressive ideology. The detailed methodology behind the content filter is documented in a paper titled “A Holistic Approach to Undesired Content Detection in the Real World” with exactly the same eight authors as the OpenAI blog post outlining their latest content moderation tool. In the words of the paper:

These filters quickly run up against reality. For example, the World Values Survey finds differing opinions on “selfishness” by national origin, with Americans describing themselves as more self-interested. No doubt, aggregating these statistics by protected classes yields similar differences, even in self-perception. But noting these statistics, or those pointed out by Hanania, would likely be classified as “hate” according to the paper.

From brief research into the authors’ personal websites and public online pronouncements, none of them appear to be overt partisans. One is interested in effective altruism, while another was a consultant for McKinsey & Company. The content filter does not appear to be driven by any employee’s desire to censor, but rather by external laws and organisations. From another paper with an author at OpenAI: “the concept of ‘protected classes’ in discrimination law provides a useful initial framework for thinking about some language model biases”. This follows Hanania’s model on how discrimination law warps corporate incentives, making it illegal to state true scientific findings.

The past few decades have seen the visions of founders lost to the political preferences of the managerial class, with examples ranging from PayPal to Twitter. “Specific” artificial intelligence, or paper-pushing at scale, offers a single chance to cheaply rewrite the bureaucratic processes governing large corporations, state and federal government agencies, NGOs and media outlets. In the right hands, it can be used to eliminate political biases endemic to the hiring processes of these organisations. In the wrong hands, it may permanently catechise a particular ideology.

Join the discussion

I asked it “how many black people were killed by police in the US in 2020.”

The answer, shortened, was “I don’t know”, but it referenced WaPo’s Fatal Force database and the Mapping Police Violence project before finishing with:

“It is important to recognise and address issues of police violence and systemic racism….

So, it struggles with facts but has no shortage of opinions.

ChatGPT is a terrible name. Just call it Woke-ipedia and have done with it.

A “fact-checked” source of supposedly unvarnished, unfiltered truth – yet it has “learned” from information sources that fit the narrative.

An AI bot that insists, with what passes for a straight-face, that gender is merely a social construct and men can bear children, just as long as they call themselves a woman.

I asked it whether men could become women, and it said they could, which is untrue, so bvgger that for a game of woke soldiers.

If you work at it, you can make it stutter an answer. It does get caught up. But each stutter gets a human to refine the issue.

What if you asked it whether a mammal born with testes and a p***s could bear children?

Another brilliant machine that is

Designed by computers.

Measured by lasers.

Built by robots.

Programmed by Roberts

…… spot the weak link.

One problem with any AI system is that it has to be trained. It has to learn the rules from known data. If there is any bias in the training data set, then the AI will learn the bias, and it’s a known feature of many AIs that they can actually amplify it. The bias in the data can come about because humans are used to classify the original training data set. So, for example, if you have a long list of statements, get humans to say whether each statement is true or false, and then use that list to train the AI, any bias in the choices made by the humans will be absorbed into the AI. There are other ways that bias can enter the training data sets.

And if we are to arrive at a helpful bot, once we see the bias we must find ways to reduce the bias. ChatGPT has added filters to avoid that balance.

Hard for an AI to “learn the rules from known data” when the people who made it no longer agree about what is known (e.g. can a man give birth, are whites inherently racist, etc.). These things are shibboleths among “educated” Westerners today, and it’s educated Westerners who define the rules.

Based on this article I registered on ChatGPT and played with it. I purposely asked questions similar to those mentioned in this article. In addition to the failure of the program to give straight answers to any questions including the words “black”, “police”, “LGBTQ” or a specific culture, “Korean”, the program delivered an admonishment that I should not be making assumptions or judgements based on these specific words and explained that everyone is different and has value. So ChatGPT is just a digital version of the New York Times, i.e. not many facts, the few facts are slanted, and all kinds of woke judgement is included in the delivered response.

“In the right hands, it can be used to eliminate political biases endemic to the hiring processes of these organisations. In the wrong hands, it may permanently catechise a particular ideology.”

Hmm. Let me guess what the outcome will be.

The General wears a dress?

Why should an AI designed and built by humans be any less biased or flawed than the humans that built it? Why should artificial intelligence be any more ‘objective’ or ‘unbiased’ than natural intelligence?

I asked it:

Why Donald Trump shouldn’t have access to nuclear codes

Why Hillary Clinton shouldn’t have access to nuclear codes

Why Joe Biden shouldn’t have access to nuclear codes

It is as if GPT-3 has been writing MSM articles these past couple of years.

AI will merely reflect the society that created it. Of course it will reflect the legal background, and in the West the legal background requires a lot of true statements to be suppressed, supposedly in the name of social harmony. A current Russian or German National Socialist AI would surely have different biases.

You want the truth? AI’s approach is that you can’t handle the truth.

Jordan Peterson gives his experiences with ChatGPT and comments on further developments.

https://www.youtube.com/watch?v=MpDW-CZVfq8

Ask it to account for a white van man flying a Union Jack flag.

Hmmm. The problem is real, but the example “black people commit more crime than white people” begs the question ‘where, and under what circumstances’. ‘Men commit more crime than women’ is such a strong effect that you cannot really deny it. But consider, for instance, ‘rich people are more intelligent than poor people’. I am sure it is true, to the extent that wealth is positively correlated with IQ test score, but which way the causation goes and what is really happening would seem to be pretty much unanswerable.

Bias in = Bias out. The term AI is quite dishonest. Artificial: yes, intelligent: no.

Now if (despite the bias) the bot argued back and called BS that could indicate a glimmer of thought…

The subject of the article here is not mere “bias” in the dataset, but subsequent manual AI system lobotomy by its operators.
